Recently, during students projects defense in École Polytechnique, a colleague asked if one knows nice elementary facts on the smallest eigenvalue of the adjacency matrix of a finite graph, for instance in relation with positivity. This reminded me some pretty old personal notes written in 2006 and never published. They form the matter of this post.

This post is devoted to the following simple fact: up to a permutation of the coordinates, the symmetric semi-definite positive matrices with entries in \( {\{0,1\}} \) are block diagonal with diagonal blocks full of ones. We provide a simple proof of this result, and we connect it with the spectrum of finite simple graphs, and with Gaussian graphical models in Statistics.

Binary matrices. Let us denote by \( {\mathcal{S}_n^+} \) the set of \( {n\times n} \) real symmetric matrices with spectrum in \( {[0,+\infty)} \), by \( {\mathcal{S}_n^{+*}} \) the set of \( {n\times n} \) real symmetric matrices with spectrum in \( {(0,+\infty)} \), and by \( {\mathcal{P}_n} \) the set of \( {n\times n} \) permutation matrices: \( {P\in\mathcal{P}_n} \) iff there exists a permutation \( {\sigma} \) of \( {\{1,\ldots,n\}} \) such that \( {P_{j,k}=\mathbf{1}_{j=\sigma(i)}} \) for all \( {1\leq j,k\leq n} \).

Theorem 1 (Positivity of Boolean matrices) If \( {C} \) is a symmetric \( {n\times n} \) matrix with entries in \( {\{0,1\}} \) and unit diagonal, then \( {C\in\mathcal{S}_n^+} \) if and only if there exists \( {P\in\mathcal{P}_n} \) such that \( {PCP^\top} \) is block diagonal with diagonal blocks full of \( {1} \).

Proof: The necessity of \( {C\in\mathcal{S}_n^+} \) is clear. Conversely, let us show that if \( {C\in\mathcal{S}_n^+} \) then \( {P C P^\top} \) is block diagonal with diagonal blocks full of \( {1} \), for some \( {P\in\mathcal{P}_n} \). The statement is true for \( {n=1} \) and \( {n=2} \). We proceed next by induction on \( {n} \). Assume that \( {C\in\mathcal{S}_{n+1}^+} \). Since \( {C_{1:n,1:n}\in\mathcal{S}_n^+} \), it follows by the induction hypothesis that

\[ Q C_{1:n,1:n}Q^\top \]

is block diagonal with diagonal blocks full of \( {1} \), for some \( {Q\in\mathcal{P}_n} \). It is then enough to show that if \( {i,j,k\in\{1,\ldots,n+1\}} \) are distinct indices with \( {n+1\in\{i,j,k\}} \) and \( {C_{i,j}=C_{j,k}=1} \), then \( {C_{i,k}=1} \). Now, since \( {C\in\mathcal{S}_{n+1}^+} \), we know that the \( {3\times 3} \) matrix \( {C_{J,J}} \) obtained from \( {C} \) by its restriction to the block of three indices \( {J:=\{i,j,k\}} \) belongs to \( {\mathcal{S}_3^+} \). But if \( {C} \) is a \( {3\times 3} \) symmetric matrix, with unit diagonal, then \( {C_{1:2,1:2}=(1,1)^\top(1,1)} \) and \( {C\in\mathcal{S}_3^+} \) imply either that \( {C_{3,1:2}=(0,0)} \) or that \( {C_{3,1:2}=(1,1)} \). ☐

There is also an alternative probabilistic proof, based on the fact that \( {\mathcal{S}_n^+} \) coincides with the set of covariance matrices of gaussian random vectors of \( {\mathbb{R}^n} \). If \( {C\in\mathcal{S}_n^+} \), then \( {C} \) is the covariance matrix of a centered Gaussian random vector \( {Z} \) of \( {\mathbb{R}^n} \) with unit variances. Moreover,

\[ \mathbb{E}(Z_iZ_j)=C_{i,j}\in\{0,1\} \]

for any \( {1\leq i,j\leq n} \). Now, for any \( {1\leq i,j\leq n} \), we have that \( {\mathbb{E}(Z_iZ_j)=1} \) if and only if \( {Z_i=Z_j} \) a.-s. whereas \( {\mathbb{E}(Z_iZ_j)=0} \) if and only if \( {Z_i} \) and \( {Z_j} \) are independent. As a consequence, one can construct inductively a partition \( {I_1,\ldots,I_r} \) of \( {\{1,\ldots,n\}} \) such that \( {Z_i=Z_j} \) a.-s. for any \( {i,j\in I_k} \) and any \( {1\leq k\leq r} \), and such that the Gaussian random vectors \( {Z_{I_1},\ldots,Z_{I_r}} \) are mutually independent. It follows that the covariance matrix \( {C} \) of \( {Z} \) is block diagonal with diagonal blocks full of \( {1} \) up to a permutation of the coordinates \( {\{1,\ldots,n\}} \).

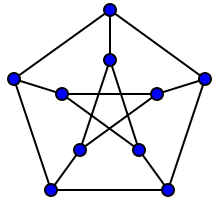

Links with finite simple graphs. For a positive integer \( {n} \), let us recall that a finite simple graph \( {G} \) with \( {n} \) vertices is a couple of the form \( {(V,E)} \) where \( {V:=\{1,\ldots,n\}} \) and where \( {E} \) is a symmetric off-diagonal subset of \( {V\times V} \). The elements of \( {V} \) and \( {E} \) are respectively the vertices and the edges of \( {G} \). The graph \( {G} \) is uniquely determined by its adjacency matrix matrix \( {A} \) defined by \( {A_{i,j}:=1} \) if \( {\{i,j\}\in E} \) and \( {A_{i,j}:=0} \) otherwise. There is a one to one correspondance between the set of finite simple graphs of \( {n} \) vertices and the set of \( {n\times n} \) symmetric matrices with entries in \( {\{0,1\}} \) and null diagonal. As a real symmetric matrix, the adjacency matrix \( {A} \) of a finite simple graph with \( {n} \) vertices has real eigenvalues. If \( {\mu} \) is the smallest eigenvalue of \( {A} \), then \( {\mu\leq-1} \) with equality if and only if the connected components of \( {G} \) are complete graphs, see for instance Doob and Dragos, Cvetkovic, Doob, and Sachs (th. 0.13 and 6.4). We give below an elementary proof of this result.

Corollary 2 Let \( {G=(V,E)} \) be a finite simple graph with \( {n} \) vertices and adjacency matrix \( {A} \) with smallest eigenvalue \( {\mu} \). If \( {\mu\geq-1} \), then either \( {\mu=0} \) and \( {E} \) is empty, or \( {\mu=-1} \) and the connected components of \( {G} \) are complete graphs.

Proof: We first notice that \( {\mu\geq-1} \) if and only if \( {C:=A+I_n\in\mathcal{S}_n^+} \). According to Theorem 1, \( {C\in\mathcal{S}_n^+} \) if and only if there exists \( {P\in\mathcal{P}_n} \) such that \( {PCP^\top} \) is block diagonal with diagonal blocks full of \( {1} \). Thus, either \( {PCP^\top=I_n} \) (and thus \( {\mu=0} \)), or \( {PCP^\top\in\mathcal{S}_n^+\setminus\mathcal{S}_n^{+*}} \) (and thus \( {\mu=-1} \)). ☐

Several aspects of finite simple graphs can be related to the spectrum of their adjacency matrix, as presented in the reference books Bollobas (VIII.2) and Dragos, Cvetkovic, Doob, and Sachs, and for instance in the articles Yong, Kelmans and Yong, Das and Kumar. The case of finite oriented graphs is in a way simpler, see for instance McKay, Oggier, Royle, Sloane, Wanless, and Wilf.

Links with Gaussian graphical models in Statistics. Let \( {G} \) be a simple finite graph with \( {n} \) vertices and adjacency matrix \( {A} \). Let \( {\mathcal{S}_n^{+*}(A)} \) be the set of matrices \( {K\in\mathcal{S}_n^{+*}} \) such that \( {K_{i,j}=0} \) if \( {A_{i,j}=0} \) for any \( {1\leq i\neq j\leq n} \).

Theorem 3 Let \( {X_1,\ldots,X_N} \) be a random sample of the centered Gaussian distribution \( {\mathcal{N}(0,K)} \) where \( {K\in\mathcal{S}_n^{+*}(A)} \). The empirical covariance matrix of the sample is

\[ \mathbb{X}:=\frac{1}{N}\sum_{k=1}^N X_k X_k^\top\in\mathcal{S}_n^+. \]

Assume that \( {A} \) is known. How to provide a “good” numerical estimate of \( {K} \), belonging to \( {\mathcal{S}_n^{+*}(A)} \), and computed from the sample?

Such estimation problems appear for instance in Gaussian covariance graph models, and in longitudinal data analysis, see for instance Chaudhuri and Drton and references therein. Recall that if \( {N>n} \), the symmetric matrix \( {\mathbb{X}} \) belongs almost-surely (a.-s.) to \( {\mathcal{S}_n^{+*}} \), see for instance Eaton and Perlman. Since we deal with a Gaussian sample, the random matrix \( {\mathbb{X}} \) follows actually a Wishart distribution, see for instance Anderson (p. 534).

Let us denote by \( {\mathcal{S}_n} \) the Hilbert space of symmetric \( {n\times n} \) real matrices, equipped with the dot product \( {U\cdot V:=\mathrm{Tr}(UV)} \). Let us also denote by \( {\mathcal{S}_n(A)} \) the set of symmetric \( {n\times n} \) matrices \( {M} \) such that \( {M_{i,j}=0} \) if \( {A_{i,j}=0} \), for any \( {1\leq i\neq j\leq n} \). We have then \( {\mathcal{S}_n^+(A)=\mathcal{S}_n(A)\cap\mathcal{S}_n^+} \) and \( {\mathcal{S}_n^{+*}(A)=\mathcal{S}_n(A)\cap\mathcal{S}_n^{+*}} \).

If \( {\circ} \) stands for the Schur-Hadamard product, the matrix \( {\mathbb{X}\circ (A+I_n)} \) is the orthogonal projection in \( {\mathcal{S}_n} \) of \( {\mathbb{X}} \) onto the hyperplane \( {\mathcal{S}_n(A)} \). The “naive estimator”

\[ \mathbb{X}\circ (A+I_n) \]

of \( {K} \) is obtained from \( {\mathbb{X}} \) by putting zeros at the entries prescribed by \( {A} \). It does not belong necessarily to \( {\mathcal{S}_n^{+*}(A)} \). We have however the following simple result.

Theorem 4 A.s. the orthogonal projection \( {p_{\mathcal{S}_n(A)}(\mathbb{X})=\mathbb{X}\circ(A+I_n) } \) of \( {\mathbb{X}} \) onto the hyperplace \( {\mathcal{S}_n(A)} \) belongs to the convex cone \( {\mathcal{S}_n^{+*}(A)} \) for large enough sample size \( {N} \). Moreover, \( {p_{\mathcal{S}_n(A)}(\mathbb{X})} \) is a strongly consistent and unbiased estimator of \( {K} \).

Proof: Let \( {C:=A+I_n} \). We have \( {\mathbb{E}(\mathbb{X}\circ C)=\mathbb{E}(\mathbb{X})\circ C=K\circ C=K} \). The law of large numbers ensures that \( {\lim_{N\rightarrow\infty}\mathbb{X}=K} \) a.-s and thus that \( {\lim_{N\rightarrow\infty}\mathbb{X}\circ C=K\circ C=K} \) a.-s. This gives the consistency and the zero bias. Notice that \( {\mathbb{X}\circ C\in\mathcal{S}_n(A)} \). Now, Since \( {K\in\mathcal{S}_n^{+*}(A)} \) and since \( {\mathcal{S}_n^{+*}(A)} \) is an open subset of the Hilbert space \( {\mathcal{S}_n(A)} \), we get \( {\mathbb{X}\circ C\in\mathcal{S}_n^{+*}(A)} \) for large enough \( {N} \), a.-s. ☐

In the result above, the threshold on the sample size \( {N} \) is random, and may vary from one sample to another. It also depends on \( {G} \) via \( {A} \). Recall that \( {\mathbb{X}\in\mathcal{S}_n^+} \), and moreover \( {\mathbb{X}\in\mathcal{S}_n^{+*}} \) a.-s. when \( {N>n} \). Unfortunately, one can have \( {p_{\mathcal{S}_n(A)}(M)\not\in\mathcal{S}_n^+} \) even if \( {M\in\mathcal{S}_n^{+*}} \). For instance, here is a counter example in dimension \( {n=3} \).

\[ M:=\left( \begin{array}{rrr} 4 & -3 & 3 \\ -3 & 4 & -3 \\ 3 &-3 & 4 \\ \end{array} \right) \in\mathcal{S}_n^{+*} \text{\quad but\quad} p_{\mathcal{S}_n(A)}(M)= \left( \begin{array}{rrr} 4 & -3 & 0 \\ -3 & 4 & -3 \\ 0 &-3 & 4 \\ \end{array} \right) \not\in\mathcal{S}_n^+. \]

However, this annoying phenomenon disappears if \( {A+I_n\in\mathcal{S}_n^+} \), as stated below.

Theorem 5 (Stability by projections) We have \( {p_{\mathcal{S}_n(A)}(\mathcal{S}_n^+)\subset\mathcal{S}_n^+} \) if and only if \( {A+I_n\in\mathcal{S}_n^+} \). Moreover, in that case, \( {p_{\mathcal{S}_n^+(A)}\equiv p_{\mathcal{S}_n(A)}} \) on \( {\mathcal{S}_n^+} \), and \( {p_{\mathcal{S}_n(A)}(\mathcal{S}_n^{+*})\subset\mathcal{S}_n^{+*}} \).

Thus, and according to Theorem 1, the naive estimator \( {\mathbb{X}\circ (A+I_n)} \) of \( {K} \) is good if and only if \( {K} \) is bloc diagonal up to a permutation of the coordinates. It is known in that case that the naive estimator coincides with the maximum likelihood estimator when \( {N>n} \).

Proof: } Set \( {C:=A+I_n} \) and let \( {1:=(1,\ldots,1)^\top(1,\ldots,1)} \) be the \( {n\times n} \) matrix full of ones. Let us establish the first part. If \( {C\in\mathcal{S}_n^+} \), then by the stability of \( {\mathcal{S}_n^+} \) by the Schur-Hadamard product (Schur’s Theorem, see Horn and Johnson (th. 7.5.3 p. 458), \( {p_{\mathcal{S}_n(C)}(M)= M\circ C\in\mathcal{S}_n^+} \) for any \( {M\in\mathcal{S}_n^+} \). Conversely, assume that \( {p_{\mathcal{S}_n(A)}(M)\in\mathcal{S}_n^+} \) for any \( {M\in\mathcal{S}_n^+} \). Then, \( {p_{\mathcal{S}_n(A)}(1)=1\circ C=C} \) since \( {1} \) is the neutral element of the Schur-Hadamard product. Therefore, \( {C\in\mathcal{S}_n^+} \). For the second part, since \( {C\in\mathcal{S}_n^+} \), we have \( {p_{\mathcal{S}_n(A)}(M)\in\mathcal{S}_n^+} \) for any \( {M\in\mathcal{S}_n^+} \) by virtue of the first part. Then, \( {p_{\mathcal{S}_n^+(A)}=p_{\mathcal{S}_n(A)}} \) on \( {\mathcal{S}_n^+} \), since if \( {\mathcal{A}} \) and \( {\mathcal{B}} \) are two non-empty closed convex subsets of a Hilbert space \( {\mathcal{H}} \) and if \( {x\in\mathcal{H}} \) is such that \( {p_\mathcal{A}(x)\in \mathcal{B}} \), then \( {p_{\mathcal{A}\cap\mathcal{B}}(x)=p_\mathcal{A}(x)} \). The third part is a consequence for instance of Oppenheim’s inequality:

\[ \det(U\circ V)\geq\det(U)\prod_{i=1}^n V_{i,i} \]

as soon as \( {U,V\in\mathcal{S}_n^+} \), cf. Horn and Johnson (th. 7.8.6 p. 480), used with \( {V=C} \) (notice that \( {C_{i,i}=1} \) for any \( {1\leq i\leq n} \). See also Horn and Johnson (th. 5.2.1 p. 309). ☐

The matrix \( {A} \) associated to the counter example mentioned above is given by

\[ A= \left( \begin{array}{rrr} 0 & 1 & 0 \\ 1 & 0 & 1 \\ 0 & 1 & 0 \\ \end{array} \right)\not\in\mathcal{S}_n^+. \]

More generally, when \( {A} \) is band diagonal, i.e. \( {A_{i,j}=0} \) if \( {|i-j|>1} \) for any \( {1\leq i,j\leq n} \), then \( {A} \) does not belong to \( {\mathcal{S}_n^+} \). These patterns correspond to chain graphs. In the same spirit, it is known that the spectrum of \( {A} \) ranges from \( {-r+1} \) to \( {r+1} \) when \( {G} \) is a bipartite \( {r} \)-regular graph, see for instance Bollobas (VIII.2). However, if \( {A+I_n} \) is block diagonal with diagonal blocks full of \( {1} \), then \( {A+I_n\in\mathcal{S}_n^+} \). According to corollay 2, \( {A+I_n\in\mathcal{S}_n^+} \) means that \( {G} \) contains any chord of any path. In particular, when \( {A=(1,\ldots,1)^\top(1,\ldots,1)-I_n\in\mathcal{S}_n^+} \), then \( {\mathcal{S}_n(A)=\mathcal{S}_n} \) (\( {G} \) is the complete graph). On the other hand, when \( {A=I_n\in\mathcal{S}_n^+} \), then \( {\mathcal{S}_n(A)} \) is the set of diagonal matrices (\( {G} \) is the empty graph).

Leave a Comment