At the coffee break this noon, a thunderous colleague(*) reproached me for keeping a blog. Surprising, isn't it? Yet a blog is a very simple way to share knowledge and spread ideas, in communities small or large depending on the subject. I have learned a great deal from reading wikis and blogs, wherever links and search engines happened to lead me. It seems we are dealing with two categories of Internet users: pure consumers, and producer-consumers. Creating has never been so simple. What are you waiting for? Don't plead lack of time, because if you had none you would not be here reading this post!

A list of academic blogs

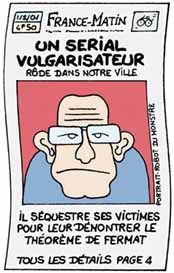

(*) Note dated April 11: the previous version of this post included the first name and the first letter of the last name of the colleague in question. That was a mistake on my part, and I apologize for having hurt this colleague. I have just removed those details. In addition, the drawing by Matyo above is self-mockery aimed at authors of popular-science blogs like mine, and at no one else.
