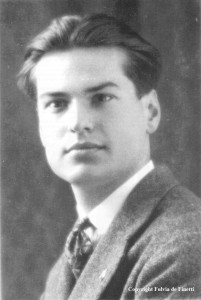

This post is devoted to some aspects of exchangeability, an important concept in probability theory, explored brilliantly by the mathematician Bruno de Finetti (1906 - 1985) who was a probabilist, a statistician, and an actuary, which is very pleasant for my teaching in Paris-Dauphine, a university centered around decision making.

The law \( {P_n} \) of a random vector \( {X=(X_1,\ldots,X_n)} \) on a product space \( {\mathbb{S}^n} \) is exchangeable when it is invariant by permutation of the coordinates:

\[ \forall f:\mathbb{S}\rightarrow\mathbb{R},\quad \forall\sigma\in\Sigma_n,\quad \int\!f\,dP_n=\int\!f_\sigma\,P_n \]

where \( {f_\sigma(x):=f(x_\sigma)} \) and \( {x_\sigma:=(x_{\sigma(1)},\ldots,x_{\sigma(n)})} \), and where \( {\Sigma_n} \) is the symmetric group formed by all bijective functions from \( {\{1,\ldots,n\}} \) to itself. It is customary to say that \( {(X_1,\ldots,X_n)} \) is a finite exchangeable (random) sequence.

Mixtures of product measures. Obviously, a sequence of iid random variables forms always an exchangeable vector. More generally, if \( {X} \) is a random vector of \( {\mathbb{S}^n} \) and if there exists a sigma-field \( {\mathcal{A}} \) such that the components \( {X_1,X_2,\ldots} \) of \( {X} \) are conditionally iid given \( {\mathcal{A}} \), then the law of \( {X} \) is exchangeable. The Hewitt-Savage theorem (see below) provides a converse.

In terms of distributions, any convex combination (mixture!) of product distributions is an exchangeable distribution. More precisely, if \( {\Pi_n} \) is a probability measure on the convex set of product probability measures on \( {\mathbb{S}^n} \), then the probability measure \( {P_n} \) on \( {\mathbb{S}^n} \) defined below is exchangeable:

\[ \int\!f\,dP_n =\int\!\left(\int\!f\,d\pi\right) \,d\Pi_n(\pi), \quad f:\mathbb{S}\rightarrow\mathbb{R}. \]

Conversely, can we approximate an exchangeable distribution \( {P_n} \) on \( {\mathbb{S}^n} \) by a mixture of product distributions? The answer is positive as expressed by the Diaconis-Freedman theorem below. Namely, we start by noting that for every test function \( {f:\mathbb{S}^n\rightarrow\mathbb{R}} \), the exchangeability of \( {P_n} \) gives

\[ \int\!f\,dP_n =\frac{1}{n!}\sum_{\sigma\in\Sigma_n}\int\!f_\sigma\,dP_n =\int\!\frac{1}{n!}\sum_{\sigma\in\Sigma_n}f_\sigma\,dP_n =\int\!\left(\int\!f\,d\mu\right)\,dP_n(x) \]

where \( {\mu} \) is the discrete probability measure given by

\[ \mu:=\frac{1}{n!}\sum_{\sigma\in\Sigma_n}\delta_{(x_{\sigma_1},\ldots,x_{\sigma_n})}. \]

Now, in order to approximate \( {\mu} \) by a product measure, we drop the bijective constraint which defines \( {\Sigma_n} \), and we replace \( {\frac{1}{n!}\sum_{\sigma\in\Sigma_n}} \) by \( {\frac{1}{n^n}\sum_{\sigma\in\Sigma_n^{\#}}} \) where \( {\Sigma_n^{\#}} \) is the set of all functions from \( {\{1,\ldots,n\}} \) to itself. This leads to approximate \( {\mu} \) by

\[ \mu^{\#}:=\frac{1}{n^n}\sum_{\sigma\in\Sigma_n^{\#}} \delta_{(x_{\sigma(1)},\ldots,x_{\sigma(n)})} =\left(\frac{1}{n}\sum_{k=1}^n\delta_{x_k}\right)^{\otimes n} =:\mu_n^{\otimes n} \]

and then approximate \( {P_n} \) by the mixture \( {P_n^{\#}} \) of product measures defined by

\[ \int\!f\,dP_n^{\#} :=\int\!\left(\int\!f\,d\mu^{\#}\right)\,dP_n =\int\!\left(\int\!f\,d\mu_n^{\otimes n}\right)\,dP_n. \]

If we denote by \( {R_n} \) the law of the empirical measure \( {\mu_n} \) under \( {P_n} \) then

\[ \int\!f\,dP_n^{\#} =\int\!\left(\int\!f\,d\rho^{\otimes n}\right)\,dR_n(\rho). \]

If we denote by \( {\Pi_n} \) the law of the product measure \( {\mu_n^{\otimes n}} \) under \( {P_n} \) then

\[ \int\!f\,dP_n^{\#} =\int\!\left(\int\!f\,d\pi\right)\,d\Pi_n(\pi). \]

Obviously if \( {\Pi_n} \) is a Dirac mass then \( {P_n} \) is a product measure.

The Diaconis-Freedman theorem states that \( {P_n^{\#}} \) is a good approximation of \( {P_n} \).

The Diaconis-Freedman theorem. Recall that the total variation distance between two probability measures \( {P} \) and \( {Q} \) is defined by

\[ \left\Vert P-Q\right\Vert_{\mathrm{TV}} :=\frac{1}{2}\sup_{\left\Vert f\right\Vert_\infty\leq1} \left(\int\!f\,dP-\int\!f\,dQ\right) =\sup_{A}(P(A)-Q(A))\in[0,1]. \]

Let \( {P_n} \) be an exchangeable law on \( {\mathbb{S}^n} \), and let \( {P_n^{\#}} \) be the mixture of product distributions constructed from \( {P_n} \) as above. For every \( {1\leq k\leq n} \), if \( {P_{n,k}} \) and \( {P_{n,k}^{\#}} \) denote the \( {k} \)-dimensional marginal distribution of \( {P_n} \) and \( {P_n^{\#}} \) respectively, then

\[ \left\Vert P_{n,k}-P_{n,k}^{\#}\right\Vert_{\mathrm{TV}} \leq\frac{k(k-1)}{n}. \]

This is known as the Diaconis-Freedman inequality or theorem.

- If \( {k>\sqrt{n}} \) then the right hand side exceeds \( {1} \) and the inequality says nothing;

- For \( {k=1} \) the right hand side vanishes and this corresponds to the fact that

\[ P_{n,1}=P_{n,1}^{\#}=\mathbb{E}_{P_n}(\mu_n) \]

where \( {\mu_n=\frac{1}{n}\sum_{k=1}^n\delta_{x_k}} \) since for every test function \( {f:\mathbb{S}\rightarrow\mathbb{R}} \),

\[ \int\!f\,dP_{n,1} =\frac{1}{n}\int\!\left(\sum_{k=1}^nf(x_k)\right)\,dP_n(x) =\int\!\left(\int\!f\,d\mu_n\right)\,dP_n; \]

For the two dimensional marginal, we have

\[ dP_{n,2}^{\#}(x_1,x_2) =\frac{n-1}{n}P_{n,2}(x_1,x_2) +\frac{1}{n}\mathbf{1}_{x_1=x_2}P_{n,1}(dx_1) \]

The \( {k} \)-dimensional marginal \( {P_{n,k}} \) satisfies similar formulas for every \( {k} \).

To prove the Diaconis-Freedman theorem (or inequality) we start from the formulas that we have already obtained, on a test function \( {f:\mathbb{S}^k\rightarrow\mathbb{R}} \), namely

\[ \int\!f\,dP_{n,k} =\int\!\left(\int\!f\,d\mu_k\right)\,dP_n, \quad\text{and}\quad \int\!f\,dP_{n,k}^{\#} =\int\!\left(\int\!f\,d\mu_k^{\#}\right)\,dP_n \]

where \( {\mu_k} \) and \( {\mu_k^{\#}} \) are the \( {k} \)-dimensional marginal distributions of

\[ \mu=\frac{1}{n!}\sum_{\sigma\in\Sigma_n}\delta_{x_\sigma} \quad\text{and}\quad \mu^{\#}=\frac{1}{n^n}\sum_{\sigma\in\Sigma_n^{\#}}\delta_{x_\sigma}. \]

Now

\[ \mu_k^{\#} =\frac{n(n-1)\cdots(n-k+1)}{n^k}\mu_k+\nu_k \]

where \( {\nu_k} \) is a positive measure, and therefore

\[ \mu_k-\mu_k^{\#}=\left(1-\frac{n(n-1)\cdots(n-k+1)}{n^k}\right)-\nu_k. \]

But since the total mass of \( {\nu_k} \) is given by

\[ \nu_k(\mathbb{S}^k)=1-\frac{n(n-1)\cdots(n-k+1)}{n^k}, \]

it follows then that

\[ \left\Vert\mu_k-\mu_k^{\#}\right\Vert_{\mathrm{TV}} \leq \frac{1}{2}\times2\left(1-\frac{n(n-1)\cdots(n-k+1)}{n^k}\right). \]

To achieve the proof the Diaconis-Freedman theorem, it remains to observe that

\[ \frac{n(n-1)\cdots(n-k+1)}{n^k} \geq\frac{(n-k+1)^k}{n^k} =\left(1-\frac{k-1}{n}\right)^k \geq1-\frac{k(k-1)}{n}. \]

The difference in nature between \( {\mu_k} \) and \( {\mu_k^{\#}} \) can be naturally understood in combinatorial terms. Namely \( {\mu_k} \) encodes the sampling of \( {k} \) balls from an urn containing \( {n} \) balls, without replacement, while \( {\mu_k^{\#}} \) does it with replacement.

When \( {\mathbb{S}} \) is finite, the upper bound can be improved as follows

\[ \left\Vert P_{n,k}-P_{n,k}^{\#}\right\Vert_{\mathrm{TV}} \leq\frac{k\min(C|\mathbb{S}|,k-1)}{n}. \]

The Hewitt-Savage theorem. Let \( {X=(X_1,X_2,\ldots)} \) be the vector of an infinite sequence of random variables on \( {\mathbb{S}} \). We say that \( {X} \) is exchangeable when its law \( {P} \) is invariant by all permutations of coordinates with finite support, meaning the set of bijective functions from \( {\mathbb{N}=\{1,2,\ldots\}} \) to itself such that all but a finite number of elements are fixed points. The law \( {P} \) is a law on the infinite product \( {\mathbb{S}^{\mathbb{N}}} \).

The Hewitt-Savage theorem states that if \( {P} \) is an exchangeable probability distribution on \( {\mathbb{S}^{\mathbb{N}}} \), then there exists a unique probability measure \( {R} \) on the set of probability measures on \( {\mathbb{S}} \), such that for every \( {n\in\mathbb{N}} \), the \( {n} \)-dimensional marginal \( {P_n} \) of \( {P} \) satisfies, for every test function \( {f:\mathbb{S}^n\rightarrow\mathbb{R}} \),

\[ \int\!f\,dP_n=\int\!\left(\int\!f\,d\rho^{\otimes n}\right)\,dR(\rho). \]

In terms of random variables, this means that there exists a sigma-field \( {\mathcal{A}} \) such that the components \( {X_1,X_2,\ldots} \) of \( {X} \) are conditionally iid given \( {\mathcal{A}} \). The Hewitt-Savage theorem can be proved by using the Diaconis-Freedman theorem: its suffices to show that the probability measure \( {R_n} \), which is the law of the empirical measure \( {\mu_n} \) under \( {P_n} \), converges weakly to some probability measure \( {R} \) on \( {\mathbb{S}} \) as \( {N\rightarrow\infty} \) (this can be done easly when \( {\mathbb{S}} \) is compact, otherwise one can use the method of moments).

The Hewitt-Savage theorem is false in general for finite sequences. This means that the law of certain finite dimensional exchangeable random vectors is not the mixture of product laws. This is fairly compatible with the fact that the Diaconis-Freedman theorem gives nothing for \( {k=n} \) (even if \( {\mathbb{S}} \) is finite!).

The Hewitt-Savage theorem for \( {S=\{0,1\}} \) is known as the de Finetti theorem. The de Finetti theorem can be stated as follows: if \( {(X_1,X_2,\ldots)} \) is an infinite random sequence of zeros and ones which is exchangeable then there exists a probability distribution \( {R} \) on \( {[0,1]} \) such that for every \( {n\geq1} \) and every \( {x_1,\ldots,x_n\in\{0,1\}} \),

\[ \mathbb{P}(X_1=x_1,\ldots,X_n=x_n) =\binom{n}{x_1+\cdots+x_n}\int\!p^{x_1+\cdots+x_n}(1-p)^{n-(x_1+\cdots+x_n)}\,dR(p). \]

A proof with martingales can be found for instance in the book of Achim Klenke (see below).

From exchangeability to asymptotic independence. Let \( {P_n} \) be an exchangeable probability measure on \( {\mathbb{S}^n} \) and suppose that under \( {P_n} \) the random empirical measure \( {\mu_n} \) tends weakly as \( {n\rightarrow\infty} \) to a non-random probability measure \( {\mu_\infty} \) on \( {\mathbb{S}} \):

\[ \mu_n\underset{n\rightarrow\infty}{\longrightarrow}\mu_\infty. \]

This is typically the case for the Coulomb gases that appear in unitary invariant random matrix ensembles for instance (in this case \( {\mathbb{S}} \) is typically \( {\mathbb{R}} \) or \( {\mathbb{C}} \) and \( {\mu_\infty} \) is continuous with a Lebesgue density). For every \( {k\leq n} \) we may write

\[ \left\Vert P_{n,k}-\mu_\infty^{\otimes k}\right\Vert_\mathrm{TV} \leq \left\Vert P_{n,k}-P_{n,k}^{\#}\right\Vert_\mathrm{TV} + \left\Vert P_{n,k}^{\#}-\mu_\infty^{\otimes k}\right\Vert_\mathrm{TV} \]

The first term in the right hand side can be controlled in turn using the Diaconis-Freedman theorem by \( {k(k-1)/n} \) which is meaningful when \( {k<\sqrt{n}} \). In order to control the second term in the right hand side, one may try to write

\[ \left\Vert P_{n,k}^{\#}-\mu_\infty^{\otimes k}\right\Vert_\mathrm{TV} \leq \mathbb{E}_{P_N}(\left\Vert \mu_n^{\otimes k}-\mu_\infty^{\otimes k}\right\Vert_\mathrm{TV}) \leq k\mathbb{E}_{P_N}(\left\Vert \mu_n-\mu_\infty\right\Vert_\mathrm{TV}). \]

Unfortunately the right hand side is equal to \( {1} \) when \( {\mu_\infty} \) is continuous since \( {\mu_n} \) is discrete. This is due to the total variation. A way to circumvent this problem is to replace the total variation distance by say a Wasserstein distance. Actually one can pass from one to another up to some loss, provided regularity or moments, and this was extensively used by Maxime Hauray and Stéphane Mischler for instance (see the reference below). One may also try to replace \( {\mathbb{E}_{P_N}\mathrm{dist}(\mu_n,\mu_\infty)} \) by \( {\mathrm{dist}(\mathbb{E}_{P_N}\mu_n,\mu_\infty)=\mathrm{dist}(P_{n,1},\mu_\infty)} \).

The weak convergence of random empirical measures of exchangeable particles when the number of particles tends to infinity is known to be equivalent to the fact that two particles taken randomly become asymptotically independent. This phenomenon is known as Tanaka's lemma in certain communities (see the course of Alain-Sol Sznitman and the survey by Sylvie Méléard below).

Example. My favorite example of finite exchangeable sequence is given by the standard Dirichlet distribution. Namely \( {X} \) is uniformly distributed on the \( {\ell_n^1} \) sphere

\[ \{(x_1,\ldots,x_n)\in\mathbb{R}_+^n:x_1+\cdots+x_n=1\}. \]

One can realize \( {X} \) as follows:

\[ (X_1,\ldots,X_n)=\frac{(Z_1,\ldots,Z_n)}{Z_1+\cdots+Z_n} \]

where \( {Z_1,\ldots,Z_n} \) are iid with standard exponential law \( {\mathcal{E}} \) of density \( {x\mapsto e^{-x}\mathbf{1}_{\mathbb{R} +}(x)} \). Thanks to the law of large numbers \( {X_1+\cdots+X_n=n(1+o_{n\rightarrow\infty}(1))} \) almost surely, and therefore for every fixed \( {k\geq1} \) the random vector \( {n(X_1,\ldots,X_k)} \) tends in law as \( {n\rightarrow\infty} \) to the product probability measure \( {\mathcal{E}^{\otimes k}} \). If \( {P_n} \) denotes the law of \( {X} \) then the law of \( {n(X_1,\ldots,X_k)} \) is a dilation of the marginal distribution \( {P_{n,k}} \). This is a remarkable asymptotic independence property! There is an analogue for \( {\ell_n^p} \) spheres, \( {p\geq1} \), and in particular for \( {p=2} \) the exponential is replaced by the Gaussian.

Other striking examples of finite exchangeability are provided by the Ginibre Coulomb gas, and by the standard Pólya urn, but let us say that it is another story!

Further reading. The literature on exchangeability is huge and crosses several parts of mathematics (see the references below). The term ``de Finetti theorem'' is used for various statements connecting exchangeability with mixtures of product distributions.

- Bruno de Finetti, Funzione caratteristica di un fenomeno aleatorio (1931);

- Bruno de Finetti, La prévision, ses lois logiques, ses sources subjectives (1937);

- Edwin Hewitt and Leonard Savage, Symmetric measures on Cartesian products (1955);

- Persi Diaconis and David Freedman, Finite exchangeable sequences (1980);

- David Aldous, Exchangeability and related topics (1985, Saint-Flour 1983);

- Hiroshi Tanaka, Limit theorems for certain diffusion processes with interaction (1984, Taniguchi Symposium 1982);

- Alain-Sol Sznitman, Topics in propagation of chaos (1991, Saint-Flour 1989);

- Sylvie Méléard, Asymptotic behaviour of some interacting particle systems; McKean-Vlasov and Boltzmann models (1996);

- Robert Phelps, Lectures on Choquet's theorem (2001);

- Olav Kallenberg, Probabilistic symmetries and invariance principles (2005);

- Maxime Hauray and Stéphane Mischler, On Kac's chaos and related problems (2014);

- Achim Klenke, Probability theory. A comprehensive course. (2014);

- Nicolas Rougerie, de Finetti theorems, mean-field limits and Bose-Einstein condensation (2015).

Note. This post benefited from discussions with François Bolley and Joaquin Fontbona.

1 Comment