I recently had the pleasure of uploading to arXiv and HAL a collaborative work with Charles Bordenave and Pietro Caputo, entitled Spectrum of Markov generators on sparse random graphs.

Let \( {X=(X_{ij})_{1\leq i,j\leq n}} \) be an \( {n\times n} \) random matrix with entries in \( {\mathbb{C}} \) which are i.i.d. with mean \( {m} \), covariance matrix \( {K=\mathrm{Cov}(\Re X_{11},\Im X_{11})} \), and variance \( {\mathrm{Tr}(K)=1} \). The sparse regime is obtained by allowing the law of \( {X_{11}} \) to depend on \( {n} \) with \( {\mathrm{Tr}(K)\rightarrow0} \) as \( {n\rightarrow\infty} \), the basic example being the adjacency matrix of Erdős-Rényi random graphs. The analysis of the sparse regime was promoted by Charles Bordenave, who has a pretty Hungarian mathematical soul. However, to simplify the exposition, this blog post restricts to the non-sparse regime, which captures most of the rigid algebraic-geometric structure: we thus assume that the law of \( {X_{11}} \) does not depend on \( {n} \). We consider the random matrix defined by

\[ L=X-D \]

where \( {D} \) is the diagonal matrix obtained from the row sums of \( {X} \), namely \( {D_{ii}=\sum_{k=1}^nX_{ik}} \). If \( {X} \) is interpreted as the adjacency matrix of a weighted oriented graph, then \( {L} \) is the associated Laplacian matrix, with zero row sums. In particular, if the weights \( {X_{ij}} \) take values in \( {[0,\infty)} \), then \( {L} \) is the infinitesimal generator of the continuous time random walk on that graph, and properties of the spectrum of \( {L} \) can be used to study its long-time behavior. Clearly, the entries of \( {L} \) are not independent, but its rows are. A related model is obtained by considering the stochastic matrix \( {P=D^{-1}X} \), which corresponds to the discrete time random walk (considered in arXiv:0808.1502). In order to analyze the spectrum of \( {L} \), it is convenient to introduce the following affine transformation of \( {L} \):

\[ M =\frac{L+nmI}{\sqrt{n}} =\frac{X}{\sqrt{n}}-\frac{D-nmI}{\sqrt{n}}. \]

By the central limit theorem, the distribution of \( {n^{-1/2}(D_{ii}-nm)} \) converges to the Gaussian law \( {\mathcal{N}(0,K)} \). Combined with the circular law for \( {n^{-1/2}X} \), this suggests the interpretation of the spectral distribution of \( {M} \), in the limit \( {n\rightarrow\infty} \), as an additive Gaussian deformation of the circular law. In a sense, our model is a non-Hermitian analogue of a model already studied by Bryc, Dembo, and Jiang years ago with the method of moments.
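The construction above is easy to reproduce numerically. The following is a minimal sketch, assuming real weights with the Exp(1) law (so that \( {m=1} \) and \( {K=\mathrm{diag}(1,0)} \)); the function names `markov_generator` and `rescaled_generator`, the size `n = 500` and the seed are illustrative choices, not taken from the paper.

```python
import numpy as np

def markov_generator(X):
    """Return L = X - D, where D is the diagonal matrix of the row sums of X."""
    return X - np.diag(X.sum(axis=1))

def rescaled_generator(X, m):
    """Return M = (L + n m I) / sqrt(n)."""
    n = X.shape[0]
    return (markov_generator(X) + n * m * np.eye(n)) / np.sqrt(n)

rng = np.random.default_rng(0)
n, m = 500, 1.0
# Assumption for illustration: i.i.d. Exp(1) weights (mean 1, variance 1).
X = rng.exponential(scale=1.0, size=(n, n))

L = markov_generator(X)
M = rescaled_generator(X, m)

# Each row of L sums to zero, as for any Markov generator.
max_row_sum = np.max(np.abs(L.sum(axis=1)))

# The rescaled centered row sums n^{-1/2}(D_ii - n m) should look standard Gaussian.
centered_row_sums = (X.sum(axis=1) - n * m) / np.sqrt(n)

print("largest |row sum| of L:", max_row_sum)
print("empirical mean and std of n^{-1/2}(D_ii - nm):",
      centered_row_sums.mean(), centered_row_sums.std())
```

The second printed line is only a finite-size illustration of the central limit theorem for the diagonal part; it says nothing by itself about the spectrum of \( {M} \).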

Basic notations and concepts. Recall that if \( {A} \) is an \( {n\times n} \) matrix, we denote by \( {\lambda_1(A),\ldots,\lambda_n(A)} \) its eigenvalues, i.e. the roots in \( {\mathbb{C}} \) of its characteristic polynomial. We label them in such a way that \( {|\lambda_1(A)|\geq\cdots\geq|\lambda_n(A)|} \). We denote by \( {s_1(A),\ldots,s_n(A)} \) the singular values of \( {A} \), i.e. the eigenvalues of the Hermitian positive semidefinite matrix \( {| A |= \sqrt{A^*A}} \), labeled so that \( {s_1(A)\geq\cdots \geq s_n(A) \geq 0} \). The operator norm of \( {A} \) is \( {\| A \|= s_1(A)} \) while the spectral radius is \( {|\lambda_1(A)|} \). We define the discrete probability measures

\[ \mu_A=\frac{1}{n}\sum_{k=1}^n\delta_{\lambda_k(A)} \quad\text{and}\quad \nu_A=\mu_{|A|} =\frac{1}{n}\sum_{k=1}^n\delta_{s_k(A)}. \]

In the sequel, \( {G} \) is a Gaussian random variable on \( {\mathbb{R}^2\cong\mathbb{C}} \) with law \( {\mathcal{N}(0,K)} \), i.e. with mean \( {0} \) and covariance matrix \( {K} \). This law has a Lebesgue density on \( {\mathbb{R}^2} \) if and only if \( {K} \) is invertible, in which case the density is \( {z=(x,y)^\top\mapsto(2\pi\sqrt{\det(K)})^{-1}\exp(-\frac{1}{2}\langle K^{-1}z,z\rangle)} \). Note that \( {K} \) is not invertible when \( {X_{11}} \) is supported in \( {\mathbb{R}} \).

Singular values of shifts. Our first result concerns the singular values of shifts of the matrix \( {M} \), a useful proxy to the eigenvalues. It states that for every \( {z\in\mathbb{C}} \), there exists a probability measure \( {\nu_z} \) on \( {\mathbb{R}_+} \) which depends only on \( {z} \) and \( {K} \) such that with probability one,

\[ \nu_{M-zI} \underset{n\rightarrow\infty}{\longrightarrow} \nu_z. \]

Moreover, the limiting law \( {\nu_z} \) is characterized as follows: its symmetrization \( {\check \nu_z} \) is the unique symmetric probability measure on \( {\mathbb{R}} \) with Cauchy-Stieltjes transform satisfying, for every \( {\eta\in\mathbb{C}_+=\{w\in\mathbb{C}:\Im(w)>0\}} \),

\[ S_{\check \nu_z}(\eta) =\int_{\mathbb{R}}\!\frac{1}{t-\eta}\,d\check\nu_z(t) =\mathbb{E}\left(\frac{S_{\check \nu_z}(\eta)+\eta } {|G-z|^2-(\eta+ S_{\check \nu_z}(\eta))^2}\right). \]

It is in fact classical to express the Cauchy-Stieltjes transform of the limiting singular values distribution as a fixed point of a nonlinear equation, which comes from a recursion on the trace of the resolvent exploiting the recursive structure of the model. The real difficulty in the proof of the result above lies in the fact that the entries of \( {L} \) are dependent (but asymptotically independent).
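As a sanity check on the fixed point equation, one may look at the degenerate case \( {G\equiv0} \) with \( {z=0} \). This corresponds to \( {K=0} \), which is outside the normalization \( {\mathrm{Tr}(K)=1} \), and is used here only as an illustration. Writing \( {S=S_{\check\nu_0}(\eta)} \), the equation becomes

\[ S=\frac{S+\eta}{-(\eta+S)^2}=-\frac{1}{\eta+S}, \quad\text{i.e.}\quad S^2+\eta S+1=0, \]

which is the quadratic equation satisfied by the Cauchy-Stieltjes transform of the semicircle law on \( {[-2,2]} \). This is what one expects, since the singular values of \( {n^{-1/2}X} \) asymptotically follow the quarter circular law, whose symmetrization is the semicircle law.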

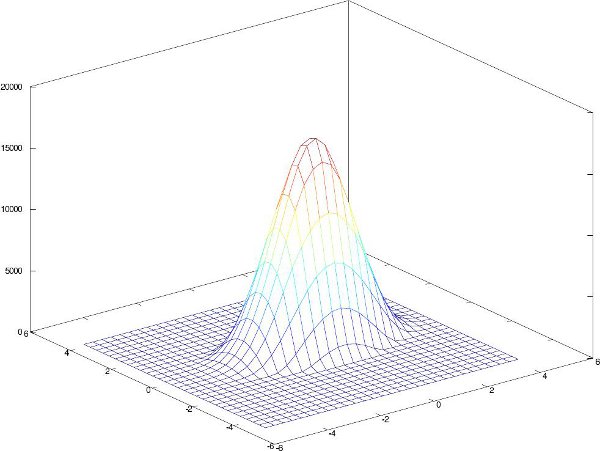

Eigenvalues convergence. The next result concerns the eigenvalues of \( {M} \):

\[ \mu_{M} \underset{n\rightarrow\infty}{\longrightarrow} \mu \]

where \( {\mu} \) is the probability measure on \( {\mathbb{C}} \) defined by

\[ \mu=\frac{1}{2\pi}\Delta\int_0^\infty\!\log(t)\,d\nu_z(t), \]

where the Laplacian \( {\Delta=4\partial_z\partial_{\overline z}=\partial_x^2+\partial_y^2} \) is taken in the sense of Schwartz-Sobolev distributions in the space \( {\mathcal{D}'(\mathbb{R}^2)} \). The limiting distribution \( {\mu} \) is independent of the mean \( {m} \) of \( {X_{11}} \), and this is rather natural since shifting the entries produces a deterministic rank one perturbation. As in other known circumstances, a rank one additive perturbation produces essentially a single outlier, and therefore does not affect the limiting spectral distribution. Our proof of the convergence to \( {\mu} \) is inspired by the logarithmic potential approach developed by Tao and Vu for the standard circular law (see also arXiv:1109.3343). As usual, the main difficulty lies in the control of the small singular values of the shifts \( {M-zI} \), in particular the norm of the resolvent. We overcome this difficulty by essentially using the techniques developed by Rudelson and Vershynin and others.

Rigid analysis of the limit. To obtain further properties of \( {\mu} \), we turn to a flavor of free probability extended to possibly unbounded operators to interpret \( {n^{-1/2}(D-nmI)} \) as \( {n\rightarrow\infty} \). Following Brown and Haagerup and Schultz, one can define a large operator \( {\star} \)-algebra in which each element \( {a} \) has a Brown spectral measure denoted \( {\mu_a} \), that is the probability measure on \( {\mathbb{C}} \) given by

\[ \mu_a=\frac{1}{2\pi}\Delta\int_0^\infty\!\log(s)\,d\nu_{|a-z|}(s) \]

where \( {|b|=\sqrt{b^*b}} \) (i.e. the square root of the self-adjoint operator \( {b^*b} \)). If \( {a} \) is normal (i.e. \( {aa^*=a^*a} \)) then its Brown measure \( {\mu_a} \) coincides with its usual spectral measure. Now let \( {c} \) and \( {g} \) be \( {\star} \)-free operators with \( {c} \) circular, and \( {g} \) normal with spectral measure equal to the Gaussian law \( {\mathcal{N}(0,K)} \). Then we are able to show that

\[ \nu_z = \mu_{|c + g - z|} \quad \text{and} \quad \mu = \mu_{c+g}. \]

Having identified the limit law \( {\mu} \), we obtain some additional information on it. Namely, we use the concept of subordination developed by Biane and Voiculescu, which allows us to show that the support of \( {\mu} \) is given by

\[ \mathrm{Supp}(\mu) = \left\{z \in \mathbb{C} : \mathbb{E}\left(\frac{1}{|G-z|^2}\right)\geq 1\right\}. \]

Moreover, there exists a unique function \( {f : \mathrm{Supp} (\mu) \rightarrow [0,1]} \), which is \( {C^\infty} \) in the interior of \( {\mathrm{Supp} (\mu)} \), such that for all \( {z\in \mathrm{Supp} (\mu)} \),

\[ \mathbb{E}\left[\frac{1}{|G-z|^2 + f(z)^2}\right]=1. \]

Moreover \( {\mu} \) is absolutely continuous in \( {\mathbb{C}} \) with density given by

\[ z\mapsto \frac{1}{\pi} f(z)^2 \mathbb{E}\left[\Phi(G,z)\right] + \frac{1}{\pi} \frac{\left|\mathbb{E}\left[(G-z)\Phi(G,z)\right]\right|^2}{\mathbb{E}\left[\Phi(G,z)\right]} \]

where

\[ \Phi(w,z):=\frac{1}{(|w-z|^2 + f(z)^2)^2}. \]
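The support condition, the function \( {f} \) and the density can be evaluated numerically. Here is a minimal sketch, assuming that \( {X_{11}} \) is real so that \( {G} \) is a real standard Gaussian (\( {K=\mathrm{diag}(1,0)} \)), and restricting to points \( {z} \) with \( {\Im z\neq0} \) so that all integrands are smooth. The quadrature grid, the bisection and the names `expect`, `in_support`, `solve_f` and `density` are illustrative choices, not the method of the paper.

```python
import numpy as np

# Quadrature grid for a real standard Gaussian G (assumption: K = diag(1, 0)).
GRID = np.linspace(-12.0, 12.0, 24001)
STEP = GRID[1] - GRID[0]
PDF = np.exp(-0.5 * GRID**2) / np.sqrt(2.0 * np.pi)

def expect(values):
    """Approximate E[h(G)] from the values of h on GRID (Riemann sum)."""
    return np.sum(values * PDF) * STEP

def in_support(z):
    """Check E[1/|G - z|^2] >= 1. Requires Im(z) != 0 (smooth integrand)."""
    return expect(1.0 / np.abs(GRID - z) ** 2) >= 1.0

def solve_f(z, tol=1e-12):
    """Bisection for f in [0, 1] with E[1/(|G - z|^2 + f^2)] = 1, for z in the support."""
    dist2 = np.abs(GRID - z) ** 2
    lo, hi = 0.0, 1.0  # the expectation is >= 1 at f = 0 and < 1 at f = 1
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if expect(1.0 / (dist2 + mid**2)) >= 1.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def density(z):
    """Evaluate the density formula of mu at a point z of the support."""
    f = solve_f(z)
    phi = 1.0 / (np.abs(GRID - z) ** 2 + f**2) ** 2
    e_phi = expect(phi)
    e_shift = expect((GRID - z) * phi)  # complex number E[(G - z) Phi(G, z)]
    return (f**2 * e_phi + abs(e_shift) ** 2 / e_phi) / np.pi

z = 0.2 + 0.5j
print("in support:", in_support(z))
print("f(z):", solve_f(z))
print("density at z:", density(z))
```

The bisection relies on the fact that \( {t\mapsto\mathbb{E}[1/(|G-z|^2+t^2)]} \) is decreasing in \( {t\geq0} \) and strictly less than \( {1} \) at \( {t=1} \).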

It can be seen that \( {\mu} \) is rotationally invariant when \( {K} \) is a multiple of the identity, while this is not the case if \( {X_{11}} \) is supported in \( {\mathbb{R}} \), in which case \( {K_{22}=K_{12}=K_{21}=0} \) (in this case \( {G} \) does not have a density on \( {\mathbb{C}} \) since \( {K} \) is not invertible). Note also that the support of \( {\mu} \) is unbounded since it contains the support of \( {\mathcal{N}(0,K)} \), and thus \( {\mathrm{Supp}(\mu)=\mathbb{C}} \) if \( {K} \) is invertible. If \( {K} \) is not invertible, it can be checked that the boundary of \( {\mathrm{Supp}(\mu)} \) is

\[ \left\{z \in \mathbb{C} : \mathbb{E}\left(\frac{1}{|G-z|^2}\right) = 1\right\}. \]

On this set, \( {f(z) = 0} \), but the formula for the density above shows that the density does not vanish there. This phenomenon, not unusual for Brown measures, occurs for the circular law and more generally for \( {R} \)-diagonal operators, see Haagerup and Larsen. Our formula above for the density is slightly more explicit than the formulas given in Biane and Lehner. The subordination formula that we use can also be used to compute more general Brown measures of the form \( {\mu_{a + c}} \) with \( {a, c} \) \( {\star} \)-free and \( {c} \) circular.
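The circular law case can be checked by hand. In the degenerate case \( {G\equiv0} \) (so that \( {c+g=c} \); this corresponds to \( {K=0} \) and is used here only as an illustration), the equation defining \( {f} \) reads \( {|z|^2+f(z)^2=1} \), hence \( {f(z)^2=1-|z|^2} \) on \( {\{|z|\leq1\}} \) and \( {\Phi(0,z)=1} \). The density formula then gives

\[ \frac{1}{\pi}(1-|z|^2)\cdot 1+\frac{1}{\pi}\frac{|-z|^2}{1}=\frac{1}{\pi}, \]

which is the density of the circular law on the unit disc. On the unit circle, \( {f} \) vanishes while the density is still equal to \( {1/\pi} \).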

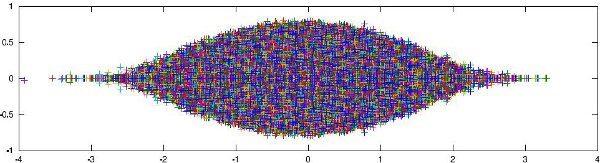

Spectrum localization. The convergence of \( {\mu_M} \) suggests that the bulk of the spectrum of \( {L} \) is concentrated around the value \( {-mn} \) in a two-dimensional window of width \( {\sqrt{n}} \). Actually, it is possible to localize the spectrum more precisely, by controlling the extremal eigenvalues of \( {L} \). Recall that \( {L} \) always has the trivial eigenvalue \( {0} \). We define for convenience the centered matrices \( {\underline X = X-\mathbb{E}X} \) and \( {\underline D=D-\mathbb{E}D} \) and \( {\underline L=L-\mathbb{E}L=\underline X-\underline D} \). If \( {J} \) stands for the \( {n\times n} \) matrix with all entries equal to \( {1} \), then

\[ \mathbb{E}L = L-\underline L= mJ - mnI. \]

Now the idea is that if \( {\mathbb{E}(|X_{11}|^4)<\infty} \) then by the Bai-Yin theorem, the operator norm of \( {\underline X} \) is \( {\sqrt{n}\,(2+o(1))} \). On the other hand, from the central limit theorem one expects that the operator norm and the spectral radius of the diagonal matrix \( {\underline D} \) are of order \( {\sqrt{2n\log(n)}\,(1+o(1))} \), as for the maximum of i.i.d. Gaussian random variables. We show indeed that if \( {X_{11}} \) is supported in \( {\mathbb{R}_+} \) and if \( {\mathbb{E}(|X_{11}|^4)<\infty} \) then with probability one, for \( {n \gg1} \), every eigenvalue \( { \underline \lambda} \) of \( {\underline L} \) satisfies

\[ |\Re \underline \lambda| \leq \sqrt{2 n\log(n)}\,(1+o(1)) \quad\text{and}\quad |\Im \underline \lambda| \leq \sqrt{n}(2+o(1)) . \]

Moreover, with probability one, for \( {n \gg 1} \), every eigenvalue \( {\lambda\neq 0} \) of \( {L} \) satisfies

\[ |\Re \lambda + m n| \leq \sqrt{2 n \log(n)}\,(1+o(1)) \quad\text{and}\quad |\Im \lambda| \leq \sqrt{n}(2+o(1)). \]

Our proof is simple and relies on classical perturbative methods in matrix analysis: refined Gershgorin and the Bauer-Fike theorems. If one defines a spectral gap \( {\kappa} \) of the Markov generator \( {L} \) as the minimum of \( {|\Re \lambda|} \) for \( {\lambda\neq 0} \) in the spectrum of \( {L} \), then it follows that a.s.

\[ \kappa\geq mn - \sqrt{2n\log(n)}\,(1+o(1)). \]
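The localization statements above can be explored on a simulation. The sketch below assumes Exp(1) weights (so \( {m=1} \), unit variance and finite fourth moment); the size `n = 300` and the seed are arbitrary. Since the bounds are asymptotic, with unspecified \( {o(1)} \) terms, the printed ratios should only be read as a rough finite-size indication.

```python
import numpy as np

rng = np.random.default_rng(1)
n, m = 300, 1.0
# Assumption for illustration: i.i.d. Exp(1) weights supported in [0, infinity).
X = rng.exponential(scale=1.0, size=(n, n))
L = X - np.diag(X.sum(axis=1))

eigs = np.linalg.eigvals(L)

# The trivial eigenvalue 0 comes from the zero row sums (constant right eigenvector).
trivial = np.argmin(np.abs(eigs))
nontrivial = np.delete(eigs, trivial)

real_dev = np.max(np.abs(nontrivial.real + m * n))  # max |Re(lambda) + mn|
imag_dev = np.max(np.abs(nontrivial.imag))          # max |Im(lambda)|
kappa = np.min(np.abs(nontrivial.real))             # spectral gap as defined above

print("trivial eigenvalue:", eigs[trivial])
print("max |Re lambda + mn| / sqrt(2 n log n):", real_dev / np.sqrt(2 * n * np.log(n)))
print("max |Im lambda| / sqrt(n):", imag_dev / np.sqrt(n))
print("kappa:", kappa, "versus mn - sqrt(2 n log n):", m * n - np.sqrt(2 * n * np.log(n)))
```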

Invariant measure. We turn to the properties of the invariant measure of \( {L} \). If \( {X_{11}} \) is supported in \( {\mathbb{R}_+} \) and if \( {L} \) is irreducible, then from the Perron-Frobenius theorem, the kernel of \( {L} \) has dimension \( {1} \) and there is a unique vector \( {\Pi\in(0,1)^n} \) such that \( {L^\top\Pi = 0} \) and \( {\sum_{i=1}^n\Pi_i =1} \). The vector \( {\Pi} \) is the invariant measure of the Markov process with infinitesimal generator \( {L} \). Actually, we show that a.s. for \( {n \gg 1} \), the Markov generator \( {L} \) is irreducible and

\[ \left\Vert\Pi - U_n\right\Vert_1 = \mathcal{O} \left(\sqrt{\frac{\log(n)}{n}}\right)=o(1), \]

where \( {U_n = \frac1n(1 ,\ldots,1)^\top} \) is the uniform probability distribution on the finite set \( {\{1, \ldots , n\}} \) and where \( {\left\Vert\cdot\right\Vert_1} \) is the total variation norm. Our proof relies on the remarkable Sylvester determinant theorem which states that if \( {A\in\mathcal{M}_{p,q}} \) and \( {B\in\mathcal{M}_{q,p}} \) are two rectangular matrices with swapped dimensions then

\[ \det(I_p+AB)=\det(I_q+BA). \]

To understand it, recall that \( {AB} \) and \( {BA} \) have the same spectrum up to the multiplicity of the eigenvalue zero, and thus their characteristic polynomials in \( {z} \) are identical up to multiplication by a power of \( {z} \), namely \( {\det(zI_p-AB)=z^{p-q}\det(zI_q-BA)} \), which gives the result by taking \( {z=-1} \). In particular, this formula allows one to pass from a high dimensional problem to a one dimensional problem.
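Returning to the invariant measure \( {\Pi} \), its closeness to the uniform law is easy to observe numerically. The following is a minimal sketch, assuming Exp(1) weights (so that all weights are positive and \( {L} \) is irreducible); the helper `invariant_measure`, which solves \( {L^\top\Pi=0} \) together with the normalization by least squares, is an illustrative choice and not the argument used in the paper.

```python
import numpy as np

def invariant_measure(L):
    """Solve L^T Pi = 0 with sum(Pi) = 1 by least squares (assumes L irreducible)."""
    n = L.shape[0]
    A = np.vstack([L.T, np.ones((1, n))])  # append the normalization constraint
    b = np.zeros(n + 1)
    b[-1] = 1.0
    Pi, *_ = np.linalg.lstsq(A, b, rcond=None)
    return Pi

rng = np.random.default_rng(2)
n = 400
# Assumption for illustration: i.i.d. Exp(1) weights.
X = rng.exponential(scale=1.0, size=(n, n))
L = X - np.diag(X.sum(axis=1))

Pi = invariant_measure(L)
U = np.full(n, 1.0 / n)
l1_distance = np.abs(Pi - U).sum()

print("min and max of n * Pi:", n * Pi.min(), n * Pi.max())
print("l1 distance to uniform:", l1_distance)
print("sqrt(log(n) / n):", np.sqrt(np.log(n) / n))
```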

Interpolation. Our results for the matrix \( {L=X-D} \) can be extended with minor modifications to the case of the matrix \( {L_{(t)}=X-tD} \), where \( {t\in\mathbb{R}} \) provided the law \( {\mathcal{N}(0,K)} \) characterizing our limiting spectral distributions is replaced by \( {\mathcal{N}(0,t^2K)} \). This gives back the circular law for \( {t=0} \). One may also interpolate between the Gaussian and the circular laws by considering \( {(1-t)X-tD} \) with \( {t\in[0,1]} \) or other parametrizations.

Open problems.

- Almost sure convergence. The mode of convergence of spectral distributions is the weak convergence in probability. We believe that this can be upgraded to almost sure weak convergence, but this requires stronger bounds on the smallest singular values of \( {M-zI} \) (i.e. norm of the resolvent). This is not a problem if \( {X_{11}} \) has a bounded density.

- Sparsity. We are able to show that the results remain essentially valid in the sparse case in which the law of \( {X_{11}} \) depends on \( {n} \). However, our treatment is not optimal. Note that an optimal answer in the sparse case is still pending even for the circular law model.

- Heavy tails. A different model for random Markov generators is obtained when the law of \( {X_{11}} \) has heavy tails, with e.g. infinite first moment. In this context, we refer to arXiv:1006.1713 and to arXiv:1109.3343 for the spectral analysis of non-Hermitian matrices with i.i.d. entries, and to arXiv:0903.3528 for the case of reversible Markov transition matrices. It is natural to expect that, in contrast with the cases considered here, there is no asymptotic independence of the matrices \( {X} \) and \( {D} \) in the heavy tailed case.

- Spectral edge and spectral gap. Concerning the localization of the spectrum, it seems natural to conjecture the asymptotic behavior \( {\kappa= mn - \sqrt{2n\log(n)}\,(1+o(1))} \) for the spectral gap, but we do not have a proof of the corresponding upper bound. In the same spirit, we believe that with probability one, with \( {\underline L=L-\mathbb{E}L} \),

\[ \lim_{n\rightarrow\infty} \frac{s_1(\underline L)}{\sqrt{2 n\log(n)}} =\lim_{n\rightarrow\infty}\frac{|\lambda_1(\underline L)|}{\sqrt{2 n\log(n)}}=1, \]

which contrasts with the behavior of \( {\underline X} \) for which \( {s_1/|\lambda_1|\rightarrow2} \) as \( {n\rightarrow\infty} \).
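The contrast between \( {\underline X} \) and \( {\underline L} \) in the last item can be observed on a simulation. This is a minimal sketch, assuming Exp(1) weights (so \( {\mathbb{E}X_{ij}=1} \)) and an arbitrary size `n = 400`; the helper names `s1` and `spectral_radius` are illustrative. The statement about \( {\underline L} \) is a conjecture, and the convergence is expected to be slow, so the last two printed numbers are only indicative.

```python
import numpy as np

def s1(A):
    """Largest singular value (operator norm) of A."""
    return np.linalg.norm(A, 2)

def spectral_radius(A):
    """Largest modulus of the eigenvalues of A."""
    return np.max(np.abs(np.linalg.eigvals(A)))

rng = np.random.default_rng(3)
n = 400
# Assumption for illustration: i.i.d. Exp(1) weights, with mean 1.
X = rng.exponential(scale=1.0, size=(n, n))

Xc = X - 1.0                   # centered X
Dc = np.diag(Xc.sum(axis=1))   # centered D: row sums of X minus n
Lc = Xc - Dc                   # centered L

ratio_X = s1(Xc) / spectral_radius(Xc)
scale = np.sqrt(2 * n * np.log(n))

print("s_1 / |lambda_1| for centered X:", ratio_X)
print("s_1(centered L) / sqrt(2 n log n):", s1(Lc) / scale)
print("|lambda_1(centered L)| / sqrt(2 n log n):", spectral_radius(Lc) / scale)
```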