The Cauchy-Stieltjes transform of a probability measure $\mu$ on $\mathbb{R}$ is

\[

s_\mu(z):=\int\frac{\mu(\mathrm{d}x)}{z-x},\quad

z\in\mathbb{C}_+:=\{z\in\mathbb{C}:\Im z>0\}.

\] Since $z\in\mathbb{C}_+$, the map $x\in\mathbb{R}\mapsto1/(z-x)$ is continuous and bounded, namely

\[

\frac{1}{|z-x|}

=\frac{1}{\sqrt{(x-\Re z)^2+(\Im z)^2}}

\leq\frac{1}{\Im z}

\quad\text{hence}\quad

|s_\mu(z)|\leq\frac{1}{\Im z}

\quad\text{(uniform in $\mu$)}.

\] This transform of measures is particularly useful for the spectral analysis of matrices. Namely, if $A$ is an $n\times n$ Hermitian matrix with eigenvalues $\lambda_1\geq\cdots\geq\lambda_n$, and if

\[

\mu_A:=\frac{1}{n}\sum_{k=1}^n\delta_{\lambda_k}

\] is its empirical spectral distribution, then for all $z\in\mathbb{C}_+$, denoting

\[

R_A(z):=(A-zI_n)^{-1}

\] the resolvent of $A$ at point $z$, we have

\[

s_{\mu_A}(z)=\frac{1}{n}\sum_{k=1}^n\frac{1}{z-\lambda_k}=-\frac{1}{n}\mathrm{Tr}(R_A(z)).

\] In random matrix theory, the transform $s_{\mu_A}$ links the spectral variable of interest, $\mu_A$, and the matrix variable $A$, which carries the assumptions. It consists of testing the empirical spectral measure $\mu_A$ on the family of test functions $\{1/(z-x):z\in\mathbb{C}_+\}$, in contrast with the method of moments, which uses the family of test functions $\{x^r:r\in\mathbb{N}\}$. The link between $s_\mu$ and the moments of $\mu$ is as follows: for all $z\in\mathbb{C}_+$

\[

s_\mu(z)

=z^{-1}\int\frac{\mu(\mathrm{d}x)}{1-\frac{x}{z}}

=z^{-1}\int\sum_{r=0}^\infty\Bigl(\frac{x}{z}\Bigr)^r\mu(\mathrm{d}x)

=\sum_{r=0}^\infty z^{-(r+1)}\int x^r\mu(\mathrm{d}x)

\] provided that $|z|>\sup\{|x|:x\in\mathrm{supp}(\mu)\}$, which is always possible when $\mu$ has compact support (otherwise $\mu$ may not even be characterized by its moments). In other words, the moments of $\mu$ are the coefficients of the expansion of $s_\mu$ in powers of $1/z$ at $\infty$. Note also that if $\mu$ is symmetric and compactly supported on $\mathbb{R}$ then, by the Cauchy-Hadamard formula for the radius of convergence,

\[

\max\mathrm{supp}(\mu)=\limsup_{r\to+\infty}\Bigl(\int x^r\mu(\mathrm{d}x)\Bigr)^{\frac{1}{r}}.

\]
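As a numerical sanity check of the resolvent formula and of the moment expansion, here is a minimal pure-Python sketch (no NumPy assumed). The helper names (`stieltjes_uniform`, `householder`, `trace_of_inverse`, `moment_series`) and the test matrix are illustrative choices: $A=QDQ$ with $D$ diagonal and $Q$ a Householder reflection, so that $A$ is real symmetric, hence Hermitian, with prescribed eigenvalues.

```python
# Sketch: check s_{mu_A}(z) = (1/n) sum_k 1/(z - lambda_k) = -(1/n) Tr (A - z I)^{-1}
# and the truncated moment series sum_r z^{-(r+1)} m_r, in pure Python.

def stieltjes_uniform(z, atoms):
    """Cauchy-Stieltjes transform of the uniform probability measure on the atoms."""
    return sum(1 / (z - x) for x in atoms) / len(atoms)


def householder(v):
    """Symmetric orthogonal reflection Q = I - 2 v v^T / |v|^2, so that Q Q = I."""
    n, nv2 = len(v), sum(c * c for c in v)
    return [[(1.0 if i == j else 0.0) - 2 * v[i] * v[j] / nv2 for j in range(n)]
            for i in range(n)]


def matmul(P, Q):
    n = len(P)
    return [[sum(P[i][k] * Q[k][j] for k in range(n)) for j in range(n)] for i in range(n)]


def trace_of_inverse(M):
    """Trace of M^{-1} via Gauss-Jordan elimination with partial pivoting."""
    n = len(M)
    aug = [[complex(a) for a in row] + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(M)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        p = aug[col][col]
        aug[col] = [a / p for a in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [a - f * b for a, b in zip(aug[r], aug[col])]
    # the right half of aug now holds M^{-1}
    return sum(aug[i][n + i] for i in range(n))


def moment_series(z, atoms, terms):
    """Truncation of sum_{r>=0} z^{-(r+1)} int x^r mu(dx), mu uniform on the atoms."""
    return sum(z ** (-(r + 1)) * sum(x ** r for x in atoms) / len(atoms)
               for r in range(terms))


# A = Q D Q with D diagonal: A is real symmetric with the eigenvalues of D.
eigenvalues = [1.5, -0.5, 0.25]
n = len(eigenvalues)
Q = householder([1.0, 2.0, -1.0])
D = [[eigenvalues[i] if i == j else 0.0 for j in range(n)] for i in range(n)]
A = matmul(matmul(Q, D), Q)

z = 0.3 + 0.7j
A_minus_z = [[A[i][j] - (z if i == j else 0.0) for j in range(n)] for i in range(n)]
via_resolvent = -trace_of_inverse(A_minus_z) / n
direct = stieltjes_uniform(z, eigenvalues)
print(via_resolvent, direct)

# Far from the spectrum, |z| > max |lambda_k| = 1.5, so the moment series converges.
z_far = 0.5 + 3.0j
print(moment_series(z_far, eigenvalues, 60), stieltjes_uniform(z_far, eigenvalues))
```

In this example the series is only meaningful for $|z|>\max_k|\lambda_k|$, while the resolvent formula holds for every $z\in\mathbb{C}_+$.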

Characterization of the measure. The Cauchy-Stieltjes transform characterizes the measure, just like the Fourier transform (characteristic function): for all probability measures $\mu$ and $\nu$ on $\mathbb{R}$,

\[

\mu=\nu\quad\text{if and only if}\quad s_\mu=s_\nu.

\]

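Proof. For all $z\in\mathbb{C}_+$, the quantity $-\frac{1}{\pi}\Im s_\mu(z)$ is the density at point $\Re z$ of the random variable $X+(\Im z)Z$, where $X\sim\mu$ and $Z\sim\mathrm{Cauchy}(0,1)$ are independent:

\[

-\frac{1}{\pi}\Im s_\mu(z)

=\frac{1}{\pi}\int\frac{\Im z}{(\lambda-\Re z)^2+(\Im z)^2}\mu(\mathrm{d}\lambda)

=(\kappa*\mu)(\Re z)

\] where

\[

\kappa(x):=\frac{\Im z}{\pi(x^2+(\Im z)^2)}

\] is the density of $(\Im z)Z$. Now if $s_\mu=s_\nu$, then for all $y>0$ the random variables $X+yZ$ and $Y+yZ$, where $Y\sim\nu$ is independent of $Z$, have the same density, hence the same characteristic function. Since $\varphi_{yZ}(t)=\mathrm{e}^{-y|t|}$, this reads $\varphi_\mu(t)\mathrm{e}^{-y|t|}=\varphi_\nu(t)\mathrm{e}^{-y|t|}$ for all $t\in\mathbb{R}$, and since $\mathrm{e}^{-y|t|}\neq0$, we get $\varphi_\mu=\varphi_\nu$, hence $\mu=\nu$. We can see the density $-\frac{1}{\pi}\Im s_\mu$ as a regularization of $\mu$ since by dominated convergence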

\[

X+(\Im z)Z \xrightarrow[\Im z\to0]{\mathrm{d}}\mu.

\] Note also that $s_\mu$ is analytic on $\mathbb{C}_+$. Thus if $s_\mu=s_\nu$ on some nonempty open subset of $\mathbb{C}_+$, however small, then the uniqueness of the analytic continuation gives $s_\mu=s_\nu$ on the whole of $\mathbb{C}_+$, hence $\mu=\nu$.
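The following sketch illustrates this proof on the discrete measure $\mu=\frac14\delta_{-1}+\frac34\delta_2$, chosen for illustration only. It compares $-\frac{1}{\pi}\Im s_\mu(x+\mathrm{i}y)$ with the Cauchy convolution $\kappa*\mu$, and approximates the mass of this density on $(1,3)$ with a midpoint rule; this mass should approach $\mu((1,3))=\frac34$ as $y\to0$. The function names are hypothetical.

```python
import math

# Sketch for mu = sum_i w_i delta_{a_i}: -(1/pi) Im s_mu(x + iy) is the density
# of X + yZ, and its mass on (a, b) approaches mu((a, b)) as y -> 0 when a and b
# are not atoms of mu.

def stieltjes(z, atoms, weights):
    return sum(w / (z - a) for a, w in zip(atoms, weights))


def smoothed_density(x, y, atoms, weights):
    """-(1/pi) Im s_mu(x + iy); note the minus sign, since Im s_mu < 0 on C_+."""
    return -stieltjes(complex(x, y), atoms, weights).imag / math.pi


def cauchy_convolution(x, y, atoms, weights):
    """(kappa * mu)(x) computed directly, kappa being the Cauchy(0, y) density."""
    return sum(w * y / (math.pi * ((x - a) ** 2 + y ** 2)) for a, w in zip(atoms, weights))


def mass_on_interval(a, b, y, atoms, weights, steps=20000):
    """Midpoint-rule approximation of the integral of the smoothed density over (a, b)."""
    h = (b - a) / steps
    return h * sum(smoothed_density(a + (k + 0.5) * h, y, atoms, weights)
                   for k in range(steps))


atoms, weights = [-1.0, 2.0], [0.25, 0.75]
for y in (1.0, 0.1, 0.001):
    # mu((1, 3)) = 0.75 is approached as y decreases
    print(y, mass_on_interval(1.0, 3.0, y, atoms, weights))
```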

Characterization of weak convergence. Let ${(\mu_n)}_{n\geq1}$ be a tight sequence of probability measures on $\mathbb{R}$. The limit $\lim_{n\to\infty}s_{\mu_n}(z)=s(z)$ exists for all $z\in\mathbb{C}_+$ if and only if there exists a probability measure $\mu$ on $\mathbb{R}$ such that $s_\mu(z)=s(z)$ for all $z\in\mathbb{C}_+$ and

\[

\lim_{n\to\infty}\int f\mathrm{d}\mu_n=\int f\mathrm{d}\mu\quad\text{for all }f\in\mathcal{C}_b.

More generally, if ${(\mu_n)}_{n\geq1}$ is a sequence of probability measures on $\mathbb{R}$, not necessarily tight, such that $\lim_{n\to\infty}s_{\mu_n}(z)=s(z)$ exists for all $z\in\mathbb{C}_+$, then there exists a sub-probability measure $\mu$ such that $s_\mu(z)=s(z)$ for all $z\in\mathbb{C}_+$ and $\mu_n\to\mu$ vaguely, namely for all test functions in $\mathcal{C}_0$. Moreover, $\mu$ is a probability measure if and only if $$\lim_{y\to+\infty}\mathrm{i}ys(\mathrm{i}y)=1,$$ and in this case the convergence holds for all test functions in $\mathcal{C}_b$.

Proof. If $\int f\mathrm{d}\mu_n\to\int f\mathrm{d}\mu$ for all $f\in\mathcal{C}_b$, then $s_{\mu_n}(z)\to s_{\mu}(z)$ for all $z\in\mathbb{C}_+$, by taking for $f$ the real and imaginary parts of $x\mapsto\frac{1}{z-x}$. Conversely, suppose that ${(\mu_n)}_{n\geq1}$ is such that $s(z):=\lim_{n\to\infty}s_{\mu_n}(z)$ exists for all $z\in\mathbb{C}_+$. By Prokhorov's theorem, the tight sequence ${(\mu_n)}_{n\geq1}$ is relatively sequentially compact for the topology of narrow convergence. If ${(\mu_{n_k})}_{k\geq1}$ is a sub-sequence converging narrowly to some probability measure $\mu$, then by the first part $s_{\mu_{n_k}}\to s_\mu$ pointwise, thus $s_\mu=s$, and $\mu$ is uniquely determined by $s$ thanks to the characterization above. All narrowly converging sub-sequences therefore have the same limit $\mu$, and by relative sequential compactness the whole sequence converges narrowly to $\mu$.

We could also avoid Prokhorov's theorem. Namely, tightness allows us to assume without loss of generality that all the $\mu_n$ are supported in a fixed compact subset $K\subset\mathbb{R}$. Let $Z\sim\mathrm{Cauchy}(0,1)$ and $X_n\sim\mu_n$ be independent. Then for all $z:=x+\mathrm{i}y\in\mathbb{C}_+$ and all $t\in\mathbb{R}$, by independence,

\[

\varphi_{X_n+yZ}(t)

:=\mathbb{E}(\mathrm{e}^{\mathrm{i}t(X_n+yZ)})

=\varphi_{X_n}(t)\mathrm{e}^{-y|t|}

\] while, by the previous proof and by dominated convergence ($K$ is compact),

\[

\varphi_{X_n+yZ}(t)

=-\frac{1}{\pi}\int\Im s_{\mu_n}(x+\mathrm{i}y)\mathrm{e}^{\mathrm{i}tx}\mathrm{d}x

\xrightarrow[n\to\infty]{}

-\frac{1}{\pi}\int\Im s(x+\mathrm{i}y)\mathrm{e}^{\mathrm{i}tx}\mathrm{d}x.

\] Note that $|\Im s(x+\mathrm{i}y)|\leq|s(z)|=|\lim_{n\to\infty}s_{\mu_n}(z)|\leq 1/\Im z=1/y$. Therefore, for all $t\in\mathbb{R}$,

\[

\varphi_{X_n}(t)

\xrightarrow[n\to\infty]{}

-\frac{\mathrm{e}^{y|t|}}{\pi}\int\Im s(x+\mathrm{i}y)\mathrm{e}^{\mathrm{i}tx}\mathrm{d}x,

\] and the limit is continuous at $t=0$ by dominated convergence. Hence, by the Paul Lévy continuity theorem, there exists a probability measure $\mu$ such that $\lim_{n\to\infty}\int f\mathrm{d}\mu_n=\int f\mathrm{d}\mu$ for all $f\in\mathcal{C}_b$. In particular, for $f(x)=\frac{1}{z-x}$, $z\in\mathbb{C}_+$, we get $s_{\mu_n}(z)\to s_\mu(z)$ for all $z\in\mathbb{C}_+$, and by uniqueness of the limit, $s_\mu(z)=s(z)$, for all $z\in\mathbb{C}_+$.
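The identity $\varphi_{X+yZ}(t)=\varphi_X(t)\mathrm{e}^{-y|t|}$ at the heart of this argument can be checked numerically. In this sketch, assuming an illustrative two-atom measure $\mu=\frac14\delta_{-1}+\frac34\delta_2$ and hypothetical helper names, the Fourier integral of $-\frac{1}{\pi}\Im s_\mu(\cdot+\mathrm{i}y)$ is truncated to $[-400,400]$ and computed with a midpoint rule of step $0.02$; these numerical parameters are ad hoc and not part of the proof.

```python
import cmath
import math

# Sketch: the Fourier transform of x -> -(1/pi) Im s_mu(x + iy) should equal
# phi_mu(t) e^{-y|t|}. The integral over R is truncated to [-L, L] and
# approximated by the midpoint rule (ad hoc choices of L and h).

def smoothed_density(x, y, atoms, weights):
    s = sum(w / (complex(x, y) - a) for a, w in zip(atoms, weights))
    return -s.imag / math.pi


def fourier_of_density(t, y, atoms, weights, half_width=400.0, h=0.02):
    steps = int(round(2 * half_width / h))
    total = 0j
    for k in range(steps):
        x = -half_width + (k + 0.5) * h
        total += smoothed_density(x, y, atoms, weights) * cmath.exp(1j * t * x)
    return total * h


def char_fn(t, atoms, weights):
    """phi_mu(t) = sum_i w_i exp(i t a_i) for mu = sum_i w_i delta_{a_i}."""
    return sum(w * cmath.exp(1j * t * a) for a, w in zip(atoms, weights))


atoms, weights, y = [-1.0, 2.0], [0.25, 0.75], 0.5
for t in (0.5, 1.0, 2.0):
    lhs = fourier_of_density(t, y, atoms, weights)
    rhs = char_fn(t, atoms, weights) * math.exp(-y * abs(t))
    print(t, abs(lhs - rhs))
```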

Finally, without tightness, if ${(\mu_n)}_{n\geq1}$ is a sequence of probability measures such that $s_{\mu_n}\to s$ pointwise for some function $s$, then by Helly's selection theorem we get a sub-sequence $\mu_{n_k}$ converging vaguely to some sub-probability measure $\mu$. Since $x\mapsto\frac{1}{z-x}$ vanishes at infinity, the first part still applies to vague convergence and gives $s_\mu=s$. Since $s$ determines $\mu$ (the proof of the characterization works for sub-probability measures as well), the vague limit is unique, and the relative sequential compactness of sub-probability measures for the topology of vague convergence gives $\mu_n\to\mu$ vaguely. This approach based on Helly's selection theorem also works in the tight case.

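Moreover, $\lim_{y\to+\infty}\mathrm{i}ys_\mu(\mathrm{i}y)=\mu(\mathbb{R})$ for every sub-probability measure $\mu$, by dominated convergence, since $\frac{\mathrm{i}y}{\mathrm{i}y-x}\to1$ as $y\to+\infty$ and $\bigl|\frac{\mathrm{i}y}{\mathrm{i}y-x}\bigr|\leq1$; when $\mu$ has compact support, this also follows from the expansion of $s_\mu$ at $\infty$ in terms of the moments given earlier in this post. In particular $\mu$ is a probability measure iff $\lim_{y\to+\infty}\mathrm{i}ys_\mu(\mathrm{i}y)=1$.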
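To see how a sub-probability limit can occur, consider the illustrative sequence $\mu_n=\frac12(\delta_0+\delta_n)$, which is not tight: half of the mass escapes to infinity. The following sketch, with hypothetical helper names, shows numerically that $s_{\mu_n}(z)\to\frac{1}{2z}=s_{\frac12\delta_0}(z)$, and that the quantity $\mathrm{i}ys(\mathrm{i}y)$ for large $y$ detects the total mass $\frac12$ of the limit.

```python
# Sketch: mu_n = (delta_0 + delta_n) / 2 converges vaguely to delta_0 / 2,
# a sub-probability measure; i y s(i y) -> mu(R) reveals the missing mass.

def stieltjes(z, atoms, weights):
    return sum(w / (z - a) for a, w in zip(atoms, weights))


def mu_n(n):
    """Atoms and weights of (delta_0 + delta_n) / 2."""
    return [0.0, float(n)], [0.5, 0.5]


def limit_s(z):
    """Transform of delta_0 / 2, of total mass 1/2."""
    return 0.5 / z


z = 1.0 + 1.0j
for n in (10, 1000, 100000):
    atoms, weights = mu_n(n)
    print(n, abs(stieltjes(z, atoms, weights) - limit_s(z)))


def mass_from_transform(s, y=1e8):
    """i y s(i y), which tends to mu(R) as y -> +infinity."""
    return 1j * y * s(1j * y)


print(mass_from_transform(limit_s))  # close to 0.5: not a probability measure
```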

Cauchy-Stieltjes transform of the semi-circle distribution. The semi-circle distribution

\[

\mu^{\mathrm{SC}}(\mathrm{d}x):=\frac{\sqrt{4-x^2}}{2\pi}\mathbb{1}_{x\in[-2,2]}\mathrm{d}x

\] satisfies

\[

s_{\mu^{\mathrm{SC}}}(z)=\frac{z-\sqrt{z^2-4}}{2}\quad\text{for all }z\in\mathbb{C}_+

\] where we take the square root with non-negative imaginary part (and with non-negative real part if the imaginary part is zero).
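A quick numerical check of this formula, as a sketch: the hypothetical helper `sqrt_upper` implements the branch of the square root described above on top of Python's principal `cmath.sqrt`, and the transform is compared with a direct quadrature of the density after the substitution $x=2\cos\theta$ (an implementation choice for this example, not part of the proof). The loop also prints the residual $s^2-zs+1$, which should vanish.

```python
import cmath
import math


def sqrt_upper(w):
    """Square root of w with non-negative imaginary part (the branch in the text)."""
    r = cmath.sqrt(w)  # principal branch: non-negative real part
    return r if r.imag >= 0 else -r


def s_semicircle(z):
    return (z - sqrt_upper(z * z - 4)) / 2


def s_semicircle_quadrature(z, steps=4000):
    """Integral of sqrt(4 - x^2) / (2 pi (z - x)) over [-2, 2], via x = 2 cos(theta).

    With this substitution, sqrt(4 - x^2) dx becomes 4 sin(theta)^2 dtheta on
    [0, pi], a smooth integrand for which the midpoint rule is very accurate."""
    h = math.pi / steps
    total = 0j
    for k in range(steps):
        theta = (k + 0.5) * h
        total += 4 * math.sin(theta) ** 2 / (2 * math.pi * (z - 2 * math.cos(theta)))
    return total * h


for z in (0.5 + 0.1j, -3.0 + 0.2j, 1j, 2.5 + 2.0j):
    s = s_semicircle(z)
    print(z, abs(s - s_semicircle_quadrature(z)), abs(s * s - z * s + 1))
```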

Proof. We could use the residue method. Alternatively, we can use the moments. The odd moments of $\mu^{\mathrm{SC}}$ are zero, while the even ones are the Catalan numbers

\[

C_n:=\frac{1}{n+1}\binom{2n}{n}=\int x^{2n}\mu^{\mathrm{SC}}(\mathrm{d}x).

\] For all $z\in\mathbb{C}_+$ with $|z|>2$,

\[

s_{\mu^{\mathrm{SC}}}(z)

=\sum_{n=0}^\infty z^{-(n+1)}\int x^n\mu^{\mathrm{SC}}(\mathrm{d}x)

=\sum_{n=0}^\infty z^{-(2n+1)}\frac{\binom{2n}{n}}{n+1}

=\frac{1}{z}G\Bigl(\frac{1}{z^2}\Bigr)

\] where $G(w):=\sum_{n=0}^\infty\frac{\binom{2n}{n}}{n+1}w^n=\sum_{n=0}^\infty C_nw^n$ is the generating function of the Catalan numbers. These numbers satisfy the recurrence relation (known as Segner's formula)

\[

C_0=1\quad\text{and}\quad

C_{n+1}=\sum_{k=0}^nC_kC_{n-k},\quad n\geq0.

\] This relation translates into the quadratic functional equation

\[

G(w)=1+wG(w)^2.

\] This equation has two solutions $\frac{1\pm\sqrt{1-4w}}{2w}$, which gives

\[

G(w)=\frac{1-\sqrt{1-4w}}{2w}

\] since the other solution blows up as $w\to0$, whereas $G(0)=C_0=1$. Finally we get

\[

s_{\mu^{\mathrm{SC}}}(z)=\frac{1}{z}G\Bigl(\frac{1}{z^2}\Bigr),\quad\text{with }G(w)=\frac{1-\sqrt{1-4w}}{2w},

\] for $z$ in a neighborhood of $\infty$, and this formula remains valid on the whole of $\mathbb{C}_+$ by analytic continuation. Conversely, starting from the functional equation, we can recover the formula $C_n=\frac{1}{n+1}\binom{2n}{n}$ by expanding the explicit expression of $G$ with the binomial series $(1+w)^\alpha=\sum_{n=0}^\infty\binom{\alpha}{n}w^n$, $|w|<1$, used with $\alpha=\frac{1}{2}$. Note also that $s_{\mu^{\mathrm{SC}}}(z)=\frac{z-\sqrt{z^2-4}}{2}$ is characterized by the quadratic functional equation

\[

s(z)^2-zs(z)+1=0,\quad z\in\mathbb{C}_+,

\] since the other solution $\frac{z+\sqrt{z^2-4}}{2}$ cannot be a Cauchy-Stieltjes transform: its imaginary part is positive, because $\Im z>0$ and $\Im\sqrt{z^2-4}\geq0$, while we always have

\[

\Im s_\mu(z)\Im z=-\int\frac{(\Im z)^2}{|z-x|^2}\mu(\mathrm{d}x)<0.

\] This quadratic equation that characterizes $s_{\mu^{\mathrm{SC}}}$ is used in a proof of the Wigner theorem.
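The combinatorial steps of this proof can be cross-checked in a few lines, as a sketch with hypothetical helper names: the Segner recurrence against the closed formula $C_n=\frac{1}{n+1}\binom{2n}{n}$, the moments of $\mu^{\mathrm{SC}}$ computed by quadrature (with the same substitution $x=2\cos\theta$), and the functional equation $G(w)=1+wG(w)^2$ at the sample point $w=0.1$, inside the disc of convergence $|w|<\frac14$.

```python
import math


def catalan_segner(n_max):
    """C_0, ..., C_{n_max} from C_0 = 1 and C_{n+1} = sum_{k=0}^{n} C_k C_{n-k}."""
    c = [1]
    for n in range(n_max):
        c.append(sum(c[k] * c[n - k] for k in range(n + 1)))
    return c


def catalan_closed(n):
    return math.comb(2 * n, n) // (n + 1)


def semicircle_moment(p, steps=2000):
    """int x^p mu_SC(dx) via x = 2 cos(theta), midpoint rule on [0, pi]."""
    h = math.pi / steps
    return h * sum((2 * math.cos((k + 0.5) * h)) ** p * 4 * math.sin((k + 0.5) * h) ** 2
                   / (2 * math.pi) for k in range(steps))


def G_series(w, terms=80):
    """Truncated generating function sum_n C_n w^n."""
    return sum(cn * w ** n for n, cn in enumerate(catalan_segner(terms)))


def G_closed(w):
    return (1 - math.sqrt(1 - 4 * w)) / (2 * w)


c = catalan_segner(10)
print(c)
print([round(semicircle_moment(2 * n), 6) for n in range(11)])
w = 0.1
print(G_series(w), G_closed(w), 1 + w * G_closed(w) ** 2)
```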

How about probability distributions on $\mathbb{C}$? As explained above, the Cauchy-Stieltjes transform characterizes the probability measures on $\mathbb{R}$. This comes from the fact that $s_\mu(z)$ sees the support of $\mu$ from above, thanks to $\Im z>0$. This does not work for probability measures supported by an arbitrary subset of $\mathbb{C}$. For instance, if $\mu$ is a radially symmetric probability measure supported in a disc $D(0,R)$, then all the complex moments $\int w^n\mu(\mathrm{d}w)$, $n\geq1$, vanish, and $s_\mu(z)=1/z$ for all $|z|>R$. This shows that the values of $s_\mu$ outside the support of $\mu$ are not enough to characterize $\mu$. We can circumvent the problem by considering $s_\mu$ inside the support, which is possible thanks to the local integrability of $1/\left|\cdot\right|$, or by using a quaternionic Cauchy-Stieltjes transform, which sees the complex plane from above.
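This non-identifiability outside the support can be observed numerically. The sketch below uses two discretized radially symmetric probability measures on the closed unit disc, the uniform law on the disc and the uniform law on the unit circle (illustrative choices, with hypothetical helper names). With $64$ equally spaced angles, the discrete measures are only invariant under rotations by multiples of $2\pi/64$, which is enough to make the complex moments of order $1\leq n<64$ vanish; both transforms then agree with $1/z$ for $|z|>1$ up to an error controlled by $|z|^{-64}$.

```python
import cmath
import math


def uniform_disc(radii=200, angles=64):
    """Uniform law on the unit disc: midpoint rule for the radial density 2 r dr,
    equally spaced angles on each ring."""
    atoms, weights = [], []
    for i in range(radii):
        r = (i + 0.5) / radii
        for k in range(angles):
            atoms.append(r * cmath.exp(2j * math.pi * k / angles))
            weights.append(2 * r / radii / angles)
    return atoms, weights


def unit_circle(angles=64):
    """Uniform law on the unit circle, discretized with equally spaced angles."""
    return ([cmath.exp(2j * math.pi * k / angles) for k in range(angles)],
            [1 / angles] * angles)


def complex_moment(n, atoms, weights):
    return sum(p * w ** n for w, p in zip(atoms, weights))


def stieltjes(z, atoms, weights):
    return sum(p / (z - w) for w, p in zip(atoms, weights))


disc, circle = uniform_disc(), unit_circle()
z = 1.5 + 0.5j  # outside the closed unit disc
print(stieltjes(z, *disc), stieltjes(z, *circle), 1 / z)
```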

Planar potential theory. The Laplacian in $\mathbb{R}^2$ reads

\[

\Delta:=\partial_x^2+\partial_y^2=4\partial_z\partial_{\overline z}=4\partial_{\overline z}\partial_z

\quad\text{where}\quad

\partial_z:=\tfrac{1}{2}(\partial_x-\mathrm{i}\partial_y)

\text{ and }

\partial_{\overline z}:=\tfrac{1}{2}(\partial_x+\mathrm{i}\partial_y).

\] The function $\log\frac{1}{\left|\cdot\right|}$ is the fundamental solution of $-\Delta$: in the sense of distributions,

\[

(-\Delta)\log\frac{1}{\left|\cdot\right|}=2\pi\delta_0.

\] For a probability measure $\mu$ on $\mathbb{C}$ such that $\mathbb{1}_{\left|\cdot\right|\geq1}\log\left|\cdot\right|\in L^1(\mu)$, the quantity

\[

u_\mu(z):=\Bigl(\mu*\log\frac{1}{\left|\cdot\right|}\Bigr)(z)=\int\log\frac{1}{\left|z-w\right|}\mu(\mathrm{d}w)\in(-\infty,+\infty]

\] called the logarithmic potential of $\mu$ at point $z\in\mathbb{C}$, is well defined for all $z\in\mathbb{C}$. Moreover, since $\log\left|\cdot\right|$ is locally integrable for the Lebesgue measure on $\mathbb{C}\equiv\mathbb{R}^2$, the Fubini-Tonelli theorem shows that $u_\mu$ is locally integrable for the Lebesgue measure; in particular, $u_\mu$ is finite almost everywhere for the Lebesgue measure. Up to the factor $2\pi$, the map $\mu\mapsto u_\mu$ is an inverse of $-\Delta$:

\[

(-\Delta)u_\mu = \mu*\Bigl(-\Delta\log\frac{1}{\left|\cdot\right|}\Bigr)=2\pi\mu.

\] We should see $u_\mu$ as a transform of $\mu$ that characterizes $\mu$:

\[

\mu=\frac{1}{2\pi}(-\Delta)u_\mu=-\frac{2}{\pi}\partial_{\overline z}\partial_z u_\mu.

\] Also, by using $\partial_z\log\left|z\right|=\frac{1}{2}\partial_z\log(z\overline z)=\frac{1}{2}\frac{\overline z}{z\overline z}=\frac{1}{2z}$, which holds since $\partial_z\overline z=0$, we get

\[

\partial_z u_\mu(z)

=-\frac{1}{2}s_\mu(z)

\] where $s_\mu(z)$ is the Cauchy-Stieltjes transform of $\mu$ at point $z$

\[

s_\mu(z)=\int\frac{1}{z-w}\mu(\mathrm{d}w)=2((\partial_z\log\left|\cdot\right|)*\mu)(z).

\] Here again, since $z\mapsto\frac{1}{z}$ is locally integrable on $\mathbb{C}\equiv\mathbb{R}^2$ for the Lebesgue measure, the Fubini-Tonelli theorem gives that $s_\mu$ is locally integrable and is in particular finite almost everywhere. Typically $u_\mu$ and $s_\mu$ can be infinite at the edge of $\mathrm{supp}(\mu)$. We have

\[

|s_\mu(z)|\leq\frac{1}{\mathrm{dist}(z,\mathrm{supp}(\mu))}.

\] This transform generalizes to probability measures on $\mathbb{C}\equiv\mathbb{R}^2$ the transform defined previously for probability measures on $\mathbb{R}$. It characterizes the measure since, in the sense of distributions,

\[

\mu=-\frac{2}{\pi}\partial_{\overline z}\partial_z u_\mu=\frac{1}{\pi}\partial_{\overline z} s_\mu.

\] On the open subset $\mathbb{C}\setminus\mathrm{supp}(\mu)$, the function $s_\mu$ is analytic, in other words $\partial_{\overline z} s_\mu=0$.
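The identities $\partial_z u_\mu=-\frac12 s_\mu$ and $\partial_{\overline z}s_\mu=0$ off the support, as well as the distance bound, can be probed with central finite differences. A minimal sketch, assuming an illustrative discrete measure with three atoms and hypothetical helper names; the Wirtinger derivatives are approximated through $\partial_z=\frac12(\partial_x-\mathrm{i}\partial_y)$ and $\partial_{\overline z}=\frac12(\partial_x+\mathrm{i}\partial_y)$, and the step $h=10^{-5}$ is an ad hoc choice.

```python
import math

# Illustrative discrete probability measure on C.
atoms = [0.0 + 0.0j, 1.0 + 1.0j, -0.5 + 2.0j]
weights = [0.5, 0.3, 0.2]


def log_potential(z):
    """u_mu(z) = int log(1 / |z - w|) mu(dw)."""
    return -sum(p * math.log(abs(z - w)) for w, p in zip(atoms, weights))


def cauchy_transform(z):
    """s_mu(z) = int 1 / (z - w) mu(dw)."""
    return sum(p / (z - w) for w, p in zip(atoms, weights))


def partial_derivatives(f, z, h=1e-5):
    """Central differences for d/dx and d/dy of f at z = x + iy."""
    fx = (f(z + h) - f(z - h)) / (2 * h)
    fy = (f(z + 1j * h) - f(z - 1j * h)) / (2 * h)
    return fx, fy


def d_z(f, z):
    fx, fy = partial_derivatives(f, z)
    return 0.5 * (fx - 1j * fy)


def d_zbar(f, z):
    fx, fy = partial_derivatives(f, z)
    return 0.5 * (fx + 1j * fy)


z = 2.0 - 1.0j  # a point outside the support
print(d_z(log_potential, z), -0.5 * cauchy_transform(z))
print(abs(d_zbar(cauchy_transform, z)))
```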

Higher dimensional potential theory. On $\mathbb{R}^d$, $d\geq1$,

\[

\Delta=\partial_{x_1}^2+\cdots+\partial_{x_d}^2=\mathrm{div}\nabla,

\] the fundamental solution is given by the Newton or Coulomb kernel

\[

(-\Delta)k=c_d\delta_0

\quad\text{where}\quad

k:=\frac{1}{(d-2)\left|\cdot\right|^{d-2}},

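\] where $c_d>0$ is the surface area of the unit sphere of $\mathbb{R}^d$ (for instance $c_2=2\pi$, consistently with the planar case), with the convention $k:=\log\frac{1}{\left|\cdot\right|}$ when $d=2$, and the analogues of the potential $u_\mu$ and of the Cauchy-Stieltjes transform $s_\mu$ of a probability measure $\mu$ on $\mathbb{R}^d$ are given by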

\[

u_\mu:=k*\mu\quad\text{and}\quad s_\mu:=(\nabla k)*\mu.

\] From this point of view, the Cauchy-Stieltjes transform is a vector field, namely the gradient $s_\mu=\nabla u_\mu$ of the potential $u_\mu$.
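As a sketch in the same spirit, assuming an illustrative two-atom measure in $\mathbb{R}^3$ and hypothetical helper names, the following code evaluates the kernel $k$ for several $d$, checks with a finite-difference Laplacian that it is harmonic away from the origin, and compares the field $s_\mu=(\nabla k)*\mu$ with a finite-difference gradient of $u_\mu=k*\mu$. It uses $\nabla k(x)=-x/|x|^d$, which holds for every $d\geq1$, including $d=2$ with the logarithmic convention.

```python
import math


def norm(x):
    return math.sqrt(sum(c * c for c in x))


def kernel(x, d):
    """k(x) = 1 / ((d - 2) |x|^(d - 2)), with k(x) = log(1 / |x|) when d = 2."""
    r = norm(x)
    return -math.log(r) if d == 2 else 1.0 / ((d - 2) * r ** (d - 2))


def grad_kernel(x, d):
    """grad k(x) = -x / |x|^d, one formula for all d >= 1."""
    r = norm(x)
    return [-c / r ** d for c in x]


def shifted(x, i, h):
    y = list(x)
    y[i] += h
    return y


def laplacian_fd(f, x, h=1e-3):
    """Second-order central-difference Laplacian of f at x."""
    fx = f(x)
    return sum((f(shifted(x, i, h)) - 2 * fx + f(shifted(x, i, -h))) / h ** 2
               for i in range(len(x)))


def potential(x, d, atoms, weights):
    """u_mu(x) = (k * mu)(x)."""
    return sum(p * kernel([a - b for a, b in zip(x, y)], d) for y, p in zip(atoms, weights))


def field(x, d, atoms, weights):
    """s_mu(x) = ((grad k) * mu)(x), a vector field."""
    out = [0.0] * d
    for y, p in zip(atoms, weights):
        g = grad_kernel([a - b for a, b in zip(x, y)], d)
        out = [o + p * gi for o, gi in zip(out, g)]
    return out


d, x = 3, [1.0, 0.5, -0.3]
atoms, weights = [[0.0, 0.0, 0.0], [0.2, -0.4, 0.1]], [0.6, 0.4]
fd_grad = [(potential(shifted(x, i, 1e-6), d, atoms, weights)
            - potential(shifted(x, i, -1e-6), d, atoms, weights)) / 2e-6 for i in range(d)]
print(fd_grad, field(x, d, atoms, weights))
```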

Sign conventions. The reason why we define $s_\mu(z)$ with $1/(z-x)$ instead of $1/(x-z)$ is that it produces nicer formulas overall, except for the minus sign in the link with the resolvent. The reason why we define $u_\mu(z)$ with $-\log\left|\cdot\right|$ instead of $\log\left|\cdot\right|$ is that it makes $u_\mu$ a genuine potential for the non-negative operator $-\Delta$.

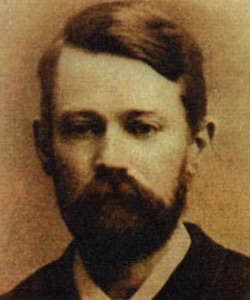

Final words. Thomas-Joannes Stieltjes used this transform, related to the more traditional Cauchy transform, to study the moment problem for probability distributions and its link with continued fractions. At that time, he was a professor at the University of Toulouse, a position he obtained with the help of Charles Hermite, with whom he maintained a correspondence. This transform is nowadays an essential tool of random matrix theory.

Personal. One century later, I was a student at the University of Toulouse, attending courses in the Stieltjes lecture hall; the other mathematics lecture hall was named after Pierre de Fermat. My first contacts with the mathematics of Stieltjes, as a student, were with the Lebesgue-Stieltjes integral in a course on measure theory by Claude Wagschal, and later with the moment problem in a course by Dominique Bakry preparing for the agrégation. It was also a great pleasure for me to meet Stieltjes again, ten years later, when studying the moment problem as a researcher in statistics, and fifteen years later when studying random matrices!

Acknowledgements. This post benefited from the feedback of Arup Bose, who pointed out a glitch.

- Stieltjes, T.-J. Recherches sur les fractions continues [suite et fin]. Annales de la Faculté des sciences de Toulouse pour les sciences mathématiques et les sciences physiques, Série 1, Tome 9 (1895), no. 1, pp. A5-A47.
- On this blog: Lettre de Hermite à Markov (8 mai 1895), 17 June 2010.
- On this blog: Éternelle humanité (correspondance Hermite-Stieltjes), 20 June 2010.
- Geronimo, Jeffrey S. and Hill, Theodore P. Necessary and sufficient condition that the limit of Stieltjes transforms is a Stieltjes transform. J. Approx. Theory 121 (2003), no. 1, 54–60.