Let us fix \( {d\geq1} \). Let \( {E_{d,n}} \) be the set of paths of the simple random walk of \( {\mathbb{Z}^d} \), with \( {2n} \) steps, starting and ending at the origin of \( {\mathbb{Z}^d} \). In other words, \( {\gamma=(\gamma_0,\ldots,\gamma_{2n})\in(\mathbb{Z}^d)^{2n}} \) belongs to \( {E_{d,n}} \) iff \( {\gamma_0=\gamma_{2n}=0} \), and \( {\gamma_{i}-\gamma_{i-1}\in\{\pm e_1,\ldots,\pm e_d\}} \) for every \( {1\leq i\leq 2n} \), where \( {e_1,\ldots,e_d} \) is the canonical basis. We have

\[ \mathrm{Card}(E_{d,n}) = \sum_{2k_1+\cdots+2k_d=2n}\binom{2n}{k_1,k_1,\ldots,k_d,k_d} =\binom{2n}{n}\sum_{k_1+\cdots+k_d=n}\binom{n}{k_1,\ldots,k_d}^2. \]

How to simulate the uniform law on the finite set \( {E_{d,n}} \)?

Case \( {d=1} \). The cardinal of \( {E_{1,n}} \) is huge:

\[ \mathrm{Card}(E_{1,n})=\binom{2n}{n}\sim\frac{2^{2n}}{\sqrt{n\pi}}. \]

To simulate the uniform law on \( {E_{1,n}} \), it suffices to generate uniformly \( {n} \) positions among \( {2n} \) positions, which can be done by using a random uniform permutation on the vector \( {V=(1,\ldots,1,-1,\ldots,-1)} \) which contains \( {n} \) symbols \( {+1} \) and \( {n} \) symbols \( {-1} \). This produces a random element \( {\Gamma} \) of \( {E_{1,n}} \), and for every \( {\gamma\in E_{1,n}} \), denoting \( {\Sigma} \) the set of permutations of \( {\{1,\ldots,2n\}} \) sending the \( {\pm 1} \)'s of \( {V} \) to the positions of the \( {\pm 1} \)'s in the edges of \( {\gamma} \),

\[ \mathbb{P}(\Gamma=\gamma) =\sum_{\sigma\in\Sigma}\frac{1}{(2n)!} =\frac{n!n!}{(2n)!}=\frac{1}{\mathrm{Card}(E_{1,n})}. \]

The complexity of this algorithm is linear in \( {n} \). Here is the pseudo-code:

V = [ones(1,n),-ones(1,n)]

GAMMA = cumsum([0,V(randperm(2n))])

Case \( {d=2} \). Using the Vandermonde binomial convolution formula, we find

\[ \mathrm{Card}(E_{2,n}) =\binom{2n}{n}\sum_{k}\binom{n}{k}^2 =\binom{2n}{n}^2\sim\frac{4^{2n}}{n\pi}. \]

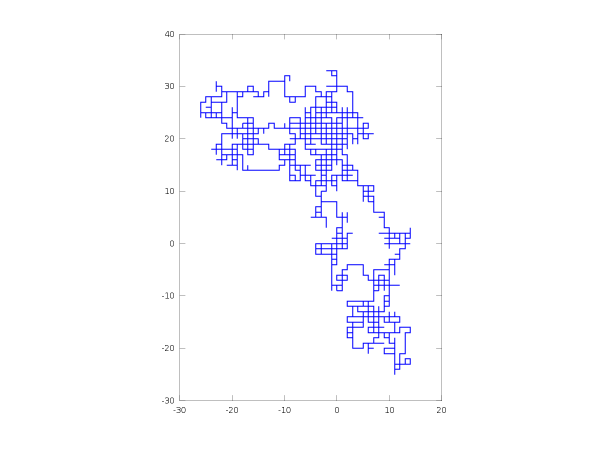

Let us use the fact that \( {{(Z_n)}_{n\geq0}={(X_n,Y_n)}_{n\geq0}} \) is a simple random walk on \( {\mathbb{Z}^2} \) iff \( {{(X_n-Y_n)}_{n\geq0}} \) and \( {{(X_n+Y_n)}_{n\geq0}} \) are two independent simple random walks on \( {2\mathbb{Z}} \). Moreover, \( {Z_n=(0,0)} \) iff \( {X_n-Y_n=0} \) and \( {X_n+Y_n=0} \). Thus, if \( {\gamma_1} \) and \( {\gamma_2} \) are independent and uniform on \( {E_{1,n}} \) then \( {(\gamma_1-\gamma_2,\gamma_1+\gamma_2)/2} \) is uniform on \( {E_{2,n}} \). This gives again an algorithm with a linear complexity in \( {n} \). Here is the pseudo-code:

V = [ones(1,n),-ones(1,n)]

A = cumsum([0,V(randperm(2n))/2])

B = cumsum([0,V(randperm(2n))/2])

GAMMA = [A-B;A+B]

General case \( {d\geq1} \). One may compute \( {C_{d,n}=\mathrm{Card}(E_{d,n})/\binom{2n}{n}} \) recursively:

\[ C_{d,n}=\sum_{k=0}^n\binom{n}{k}^2C_{d-1,n-k}. \]

For \( {d\geq3} \), no simple closed formula seems to be known for \( {C_{d,n}} \), see the book Problems in applied mathematics (problem 87-2 page 149). Let \( {\gamma} \) be an element of \( {E_{d,n}} \), and let \( {k_1,\ldots,k_d} \) be the number of \( {e_1,\ldots,e_d} \) steps. Since the path starts and ends at the same point, this is also the number of \( {-e_1,\ldots,-e_d} \) steps, and \( {k_1+\cdots+k_d=n} \). To make \( {\gamma} \) random and uniform, we may first generate \( {(k_1,\ldots,k_d)} \) with probability

\[ p_{k_1,\ldots,k_d} =\frac{\binom{n}{k_1,\ldots,k_d}^2}{C_{d,n}} \]

then create the vector

\[ V=(e_1,\ldots,e_1,\ldots,e_d,\ldots,e_d,-e_1,\ldots,-e_1,\ldots,-e_d,\ldots,-e_d) \]

where for each \( {1\leq i\leq d} \), both \( {e_i} \) and \( {-e_i} \) appear \( {k_i} \) times, and finally permute this vector with a random uniform permutation. This gives a random \( {\Gamma} \) in \( {E_{d,n}} \) such that for every \( {\gamma\in E_{d,n}} \) with \( {k'_i} \) the number of \( {e_i} \) in \( {\gamma} \), and \( {\Sigma} \) the set of permutations of \( {\{1,\ldots,2n\}} \) sending the \( {\pm e_i} \)'s of \( {V} \) to the positions of the \( {\pm e_i} \)'s in the edges of \( {\gamma} \),

\[ \mathbb{P}(\Gamma=\gamma) =\sum_{\sigma\in\Sigma}\frac{p_{k_1',\ldots,k_d'}}{(2n)!} =\frac{k_1'!^2\cdots k_d'!^2}{(2n)!}p_{k_1',\ldots,k_d'} =\frac{1}{\mathrm{Card}(E_{d,n})}. \]

This algorithm, valid for any \( {d\geq1} \), coincides with the one used above for \( {d=1} \), but differs from the one used above for \( {d=2} \) (which was based on a special property). Here is the pseudo-code (does not include the implementation of randk):

K = randk(n,d)

t = 0

for i=1 to d do

if K(i)\( {>} \)0 then

V(t+1:t+K(i),i) = ones(K(i),1)

t = t+K(i)

endif

endfor

V = [V;-V]

GAMMA = cumsum([zeros(1,d);V(randperm(2n),:)])

Note. This algorithm reduces the problem to the implementation of randk, for which I do not have an elegant solution for now when \( {d\geq3} \), apart using the classical algorithm for discrete laws, or a rejection method, or a Metropolis-Hastings approximate algorithm. It is maybe possible to reduce the problem to the simulation of the standard multinomial distribution. The vector \( {(k_1,\ldots,k_d)} \) belongs to the discrete simplex

\[ \{(k_1,\ldots,k_d)\in\{0,1,\ldots,n\}:k_1+\cdots+k_d=n\}, \]

and the cardinal of this set is \( {\binom{n+d-1}{n}} \) (the number of multinomial coefficients). As for the standard multinomial distribution, the largest value of \( {p_{k_1,\ldots,k_d}} \) is achieved when \( {k_1,\ldots,k_d} \) are such that \( {\lfloor n/d\rfloor \leq k_i\leq \lceil n/d\rceil} \) for every \( {1\leq i\leq d} \) (central multinomial coefficients).

Note. The analysis of the coupon collector problem indicates that when \( {d\gg1} \) and \( {n\gg d\log(d)} \) then almost all the \( {k_i} \)'s are non zero.

Note. To solve the more general random walk bridge problem in which the starting point \( {x} \) and the ending point \( {y} \) are not the same, one may think of adding \( {|y_i-x_i|} \) occurrences of \( {\mathrm{sign}(y_i-x_i)e_i} \) in the recipe.

Problem. Solve the non symmetric case (step \( {e_i} \) with probability \( {p_{e_i}} \)).