Generating random permutations following the uniform law on the symmetric group \( {S_n} \) is useful in many concrete situations. Various simulation algorithms are available. At the time of writing, the randperm function of Octave/Freemat/Matlab is implemented as

\[ \mathrm{[junk,perm]=sort(rand(1,n))} \]

This algorithm is based on the following fact: if \( {U_1,\ldots,U_n} \) are i.i.d. uniform random variables on \( {[0,1]} \) (one may replace the uniform law by any other law without atoms) then the random permutation which sorts them is distributed according to the uniform law on \( {S_n} \). The computational complexity of the algorithm is roughly the complexity of the quicksort which is \( {O(n\log(n))} \) with high probability and \( {O(n^2)} \) in the worst case. By the way, the randomized version of quicksort is analyzed in the book by Motwani and Raghavan. It is quite fun to realize that in order to produce a disorder, we reorder a disorder.

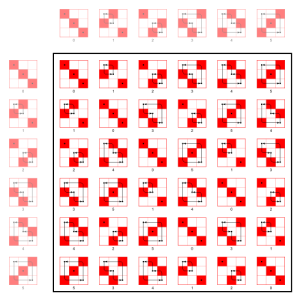

Actually, one can get rid of the logarithmic factor: there exist \( {O(n)} \) algorithms producing uniform random permutations. For instance, one may pick without replacement the \( {n} \) images of the permutation. Unfortunately, this method is difficult to implement since we have to keep track of the already chosen elements, and this may have a cost. Alternatively, one may also use the Chinese restaurant process. Recall that the Chinese restaurant process (with parameter \( {\theta=1} \)) consists at step \( {k} \) either to add \( {k} \) to an existing cycle (dealing with \( {\{1,\ldots,k-1\}} \)) with a probability proportional to its length, or to create a new cycle of unit length with probability \( {1/k} \). Starting from the permutation \( {(1)\cdots(n)} \) with \( {n} \) fixed points, this corresponds to chose \( {i} \) uniform over \( {\{1,\ldots,k\}} \) and to compute the transposition \( {(i,k)} \). Here \( {(i,k)} \) is the identity if \( {i=k} \), and we recall that the effect of a multiplication with the transposition \( {(i,k)} \) is either the merge of the cycles containing \( {i} \) and \( {k} \) if they were disjoint or the split of the cycle containing both \( {i} \) and \( {k} \) if they belong to the same cycle. Here since \( {k} \) belongs to a cycle of length \( {1} \), we add it to the cycle containing \( {i} \) with probability \( {(k-1)/k} \). Therefore, the algorithm reduces to compute the product of transpositions

\[ (1U_1)\cdots(nU_n) \]

where \( {U_1,\ldots,U_n} \) are independent random variables with \( {U_k} \) uniform on \( {\{1,\ldots,k\}} \). This algorithm, also known as the Fisher-Yates or Knuth shuffle, is very simple to implement:

\[ \begin{array}{rcl} &&\mathrm{for\ k\ from\ 1\ to\ n\ do\ v[k]:=k;\ end} \\ &&\mathrm{for\ k\ from\ n\ downto\ 2\ do\ i:=rand(1,k);\ swap(v[i],v[k]);\ end} \end{array} \]

This is the algorithm used at the time of writing by the grand generator of Scilab via the Fortran 77 routine genprm. Beware that this algorithm is not competitive if simply implemented in an interpreted language (that explains the sort(rand) trick above). Note that the Chinese restaurant process has also the ability of producing almost for free the cycles decomposition. With an arbitrary \( {\theta} \), it allows more generally to simulate random permutations on \( {S_n} \) following the Ewens law with parameter \( {\theta} \) (we recover the uniform law on \( {S_n} \) when \( {\theta=1} \)). The Ewens law appears as a Boltzmann-Gibbs measure on \( {S_n} \) with inverse temperature \( {-\log(\theta)} \) and energy equal to the number of cycles.

There exists approximate Monte Carlo Markov Chains (MCMC) methods, based on an aperiodic and irreducible Markov chain on \( {S_n} \) admitting the uniform law on \( {S_n} \) as an invariant law. For instance, one may use the random walk on \( {S_n} \) with i.i.d. increments distributed according to the uniform law on transpositions. This Markov chain on \( {S_n} \) features an \( {n\log(n)} \) cutoff in total variation as shown by Diaconis and Shahshahani. One may also use the top to random shuffle Markov chain which features also an \( {n\log(n)} \) cutoff related to the coupon collector, see e.g. Aldous and Diaconis. More examples are considered in an article by Diaconis and Fill and Pitman and in the excellent relatively recent book by Levin and Peres and Wilmer.

The Steinhaus-Johnson-Trotter algorithm is also based on transpositions.

Note: this post benefited from discussions with many people at various occasions, including Laurent Miclo, Edouard Maurel-Segala, and Yan Doumerc.

1 Comment