Distance learning has been forced on everyone abruptly in these times of fighting the coronavirus epidemic. Digital technology is not an end in itself. It is a means of supporting remote teaching, which has its advantages and its drawbacks. This post promotes the idea of a remote pedagogy that is flipped, digital and frugal, that takes its time, and that does not overdo it.

Warning. This post is mostly about teaching adults at university, or even at master's level.

Precarity. Some students live in precarious conditions that make work difficult, or are poorly equipped. This also applies to some teachers, notably doctoral students.

Connectivity. Some students and teachers have little or no connectivity, and often rely on a mobile subscription with a limited data plan. Moreover, the rise of remote work puts pressure on the servers of educational institutions, but also on those of the American digital giants, the GAFAM, especially G and M with their G Suite and Office 365 service bundles respectively.

Frugality. In this context of strain and saturation, promoting digital frugality is the obvious thing to do. In particular, this means moderating the use of video for teaching and online meetings. As a reminder, video consumes much more than audio, which in turn consumes much more than text messaging. Digital frugality fits naturally into a broader approach of using resources sensibly. Frugality makes digital technology more ethical, more sustainable, more responsible.
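To make the order of magnitude concrete, here is a minimal sketch of the arithmetic for a student on a limited data plan. The bitrates below are illustrative assumptions, not measurements of Teams or any other tool, and the function names are hypothetical; only the ranking video, then audio, then text comes from the paragraph above.

```python
# Illustrative bitrates in kilobits per second. These are ASSUMPTIONS chosen
# for the sake of the example, not measurements of any particular tool.
ASSUMED_KBPS = {"video": 1500.0, "audio": 50.0, "text": 1.0}


def megabytes_used(kbps: float, minutes: float) -> float:
    """Data volume in megabytes for a stream at `kbps` lasting `minutes`."""
    kilobits = kbps * minutes * 60
    return kilobits / 8 / 1000  # kilobits -> kilobytes -> megabytes


def sessions_within_plan(plan_gb: float, kbps: float, minutes: float) -> int:
    """Number of whole sessions that fit in a data plan of `plan_gb` gigabytes."""
    per_session_mb = megabytes_used(kbps, minutes)
    return int(plan_gb * 1000 // per_session_mb)


if __name__ == "__main__":
    # Compare one 60-minute session in each medium under the assumed bitrates.
    for medium, kbps in ASSUMED_KBPS.items():
        mb = megabytes_used(kbps, 60)
        print(f"{medium}: {mb:.1f} MB per hour")
```

Under these assumed figures, a plan of a few gigabytes covers only a handful of hours of video, but a very large number of hours of audio or text. The exact numbers depend entirely on the tool and its settings.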

Dropout. It is well known that distance learning comes with a high risk of dropping out: loss of motivation, discouragement, and so on. This phenomenon is as old as distance learning itself, and those who have worked or studied at the Centre National d’Enseignement à Distance (CNED) know the problem well. The ways to counter remote dropout are also known. A precise schedule and rigorous follow-up are among them.

Workload. Another trap that remote teachers easily fall into is giving students too much work. Getting the workload right is a delicate art which, ideally, is practiced dynamically, taking into account how each student is progressing.

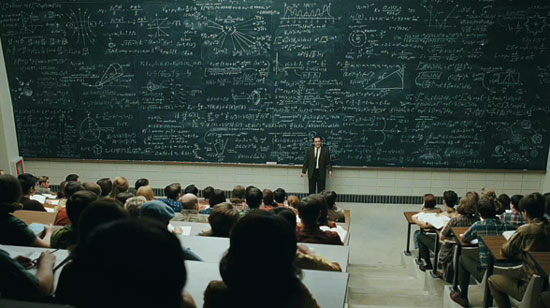

Flipping. Rather than spending interaction time with students on an essentially one-way transmission of knowledge, one can give them accessible teaching material that they study without the teacher, at their own pace, and spend the interaction time answering their questions. This is the flipped classroom: lessons at home and homework in class. In practice it requires preparing substantial and suitable teaching material. The flipped classroom is opposed to the in-person lecture, but not to the recorded lecture. It is compatible with running exercise sessions, and can even become more effective when the solutions to the exercises are provided in advance.

Slowness. Today's world is fast, reactive, instantaneous, channel-hopping, synchronous, and the omnipresence of digital technology bears part of the responsibility for this. Yet learning very often requires concentration, depth, stillness and asynchrony, all of which pull toward slowness. Restoring a little slowness to teaching today means regulating the instantaneous character of some common digital habits. This amounts to promoting a form of quality at the expense of a form of quantity.

Teams. With Microsoft Teams, teachers are strongly tempted to stick as closely as possible to their previous in-person practice, relying essentially on video conferencing. Let us instead try to describe one possible implementation of a frugal, digital flipped classroom with a Teams team. Channels are useful for message discussions between students and teachers, in text form, and from time to time in audio or video. Private channels can naturally host discussions among teachers on the one hand, and among students on the other. Setting up a warm video conference at the start of the course to explain the rules of the game to the students is useful too. The shared files hold lecture notes, exercises, and above all a detailed schedule. Rather than using a lot of video conferencing for a synchronous course, whose pace will in any case always be too fast or too slow, it may be better to spend that time writing solutions to the exercises, following up with the students, and exchanging with them online by messaging, and this does not rule out a little audio or video on a regular basis. Teachers who like a firmer framework can even organize short follow-up meetings, regular and mandatory for the students, to keep dropout in check.

Teachers have developed many practices. Some share their screen to comment on a PDF/PPT and point with the mouse, others film themselves writing on a board or on paper, sometimes using a flexible smartphone mount, while others have a tablet with a stylus (with or without a screen). Having a stylus makes it possible to use the shared whiteboard in Teams, or to share the screen of OneNote or xournalpp, which some appreciate for drawings and mathematical formulas. The GoodNotes and Notability applications are popular on Apple iPad.

Video. In a group video conference, participants who are not speaking are often asked to turn off their camera to improve quality. Yet in principle, only those with a poor connection need to do so, and they should also turn off incoming video, which is possible in Teams via the «…» in the central control bar. The others have much to gain from keeping their camera on to make the moment warmer. It is also always useful to identify the weak link in one's Internet connection chain: it is sometimes the wifi link to the home router, and in that case it helps to move or get closer to it.

Dauphine. Several of my colleagues have implemented variants of what is described here.

Free software. It is possible to imitate Teams to some extent by coupling Mattermost with Jitsi or BBB.

Personal. During my teenage years I followed the distance education provided by the CNED. This was before computing and the Internet spread massively among the general public, with its world wide web, its video conferencing, and its instant messaging. Back then everything went through the postal mail, light years away from Teams. We received the paper material and a schedule at the beginning. There were regular assignments to hand in, which the teachers corrected and commented on. The round trip took weeks; it was another era. I was a minor, and my parents made sure, from near or far, that dropout did not set in.

About high school, maturity, and forgetting frugality for a moment:

Further reading.

- Manager ses équipes à distance, formation accélérée en cette période de covid-19, by Miguel Membrado, who prefers to speak of mobile working rather than telework.