It is a great time for scientific conferences over the Internet, a discovery for many colleagues and communities. Ideally, such events should be organized using software platforms implementing virtual reality, as in certain video games, with virtual buildings, virtual rooms, virtual characters, virtual discussions, virtual restaurants, etc. Unfortunately, such platforms do not seem to be available yet with the expected level of quality and flexibility, even though I have heard that some colleagues from PSL University managed to organize a virtual poster session using software initially designed for virtual museums!

Online scientific conferences are very easy to organize, cost almost nothing, and have an excellent carbon footprint in comparison with traditional face-to-face on-site conferences. The carbon footprint of the Internet is not zero, but the fraction used for an online scientific event is negligible compared with that of an on-site conference with plenty of participants flying in from far away. The main drawback of online scientific events is of course the limited interaction between participants, and the fact that they do not pull participants away from their daily life and duties. Still, online conferences are an excellent way to maintain the social link inside scientific communities. The term webinar/webconference is sometimes used, but it emphasizes the web, which is not the heart of the concept.

It would be unfortunate to move all scientific conferences online. It would be better to reduce the number of traditional conferences and try to increase their quality, for instance by asking participants to stay longer. Also, I would not be surprised to see the development of blended or hybrid conferences mixing on-site participants and remote online participants.

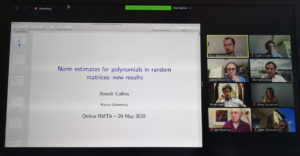

I recently had the opportunity to co-organize with a few colleagues an online scientific conference on Random Matrices and Their Applications, as a replacement for a conventional on-site face-to-face conference in New York canceled due to COVID-19. The initial conference was supposed to last a whole week, with about thirty talks and a poster session. For the online replacement, we decided to keep the same week. About twelve of the initial speakers agreed to give an online talk. For simplicity, we then decided to schedule three 45-minute talks per day on Monday, Tuesday, Thursday, and Friday, and to give up the poster session. We used the same schedule for each day, with a first talk at 10 am New York local time. We had between 80 and 150 participants per talk, from all over the world. In short:

- Schedule. Only a few talks per day, at hours compatible with as many local times as possible

- Website. Speakers, titles, abstracts, slides, registration

- Talks. To improve the speakers' experience, keep a few cameras turned on during the talks, typically those of the chairperson, organizers, and coauthors. The integrated text chat can be used for questions

- Workspace. An online collaborative workspace running in parallel is useful. A private channel can host the discussions between organizers, replacing emails, while a general channel can host the interaction with and between the speakers and the participants, etc.
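
To check how a fixed New York start time lands in other parts of the world, a few lines of Python are enough. This is only a minimal sketch: the date is a placeholder (the actual conference week is not stated here), and the list of time zones and the helper name `local_start_times` are arbitrary choices made for illustration.

```python
from datetime import datetime
from zoneinfo import ZoneInfo  # standard library, Python 3.9+


def local_start_times(start, zone_names):
    """Express one timezone-aware start time in each of the given zones."""
    return {name: start.astimezone(ZoneInfo(name)) for name in zone_names}


# Placeholder date (a Monday), NOT the actual conference date.
# Only the 10 am New York start time comes from the text above.
first_talk = datetime(2020, 6, 1, 10, 0, tzinfo=ZoneInfo("America/New_York"))

# Arbitrary sample of participant time zones, chosen for illustration.
zones = ["America/Los_Angeles", "Europe/Paris", "Asia/Tokyo"]

times = local_start_times(first_talk, zones)
for name, t in times.items():
    print(f"{name:22s} {t:%a %H:%M}")
```

With such a table at hand, it is easy to see which regions get an early morning or late evening slot, and to shift the start time if too many participants are affected.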

On the technical side, we decided to use tools that are current social standards rather than the best-quality solutions, namely DokuWiki for the website, Zoom for the talks, and Slack for the workspace.
